Classification with Confidence

Fine-Tuning LLMs for Better Classification

When building text classification systems, a key question emerges: how much can LLM fine-tuning improve classification accuracy compared to using base models with prompt engineering alone?

This project demonstrates the practical application of LLM fine-tuning to align a classifier with specific business requirements. It's not groundbreaking research—it's a hands-on exploration of techniques that prove valuable in production systems.

The Problem: When to Trust AI Decisions

In production AI systems, especially those involving human-in-the-loop (HITL) workflows, a critical question arises: when should the system make autonomous decisions, and when should it escalate to human review?

Confidence scoring provides the answer. By understanding not just what the model predicts but how confident it is in that prediction, we can:

- Route high-confidence predictions straight through

- Flag low-confidence cases for human review

- Optimize the balance between automation and accuracy

- Continuously improve by learning from the cases humans review
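The routing idea above can be sketched in a few lines. This is a minimal illustration, not the project's actual code: the `Prediction` type, the `route` function, and the 0.9 threshold are all assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    label: str
    confidence: float  # assumed to be a probability in [0, 1]


def route(pred: Prediction, threshold: float = 0.9) -> str:
    """Return 'auto' to accept the prediction, or 'review' to escalate to a human.

    The threshold is a hypothetical default; in practice it would be tuned
    on held-out data.
    """
    if not 0.0 <= pred.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return "auto" if pred.confidence >= threshold else "review"
```

A prediction with confidence 0.97 would be accepted automatically under this default, while one at 0.55 would go to the review queue.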

The Approach: Fine-Tuning for Alignment

We took a straightforward classification task and compared two approaches:

- Base model with prompt engineering: Using GPT-4o-mini out of the box

- Fine-tuned model: Training the same model on labeled examples
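For the fine-tuned variant, OpenAI chat models are trained on a JSONL file where each line holds a `messages` list. The sketch below shows how labeled examples might be converted to that shape. The system prompt, the label names, and the helper names are assumptions for illustration; the post does not show the project's actual dataset or prompt.

```python
import json
from typing import Iterable, Tuple

# Hypothetical prompt and labels; the real project may use different ones.
SYSTEM_PROMPT = "Classify the text as 'positive' or 'negative'. Reply with the label only."


def to_chat_example(text: str, label: str) -> dict:
    """Wrap one labeled example in the chat fine-tuning message format."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": text},
            {"role": "assistant", "content": label},
        ]
    }


def to_jsonl(examples: Iterable[Tuple[str, str]]) -> str:
    """Serialize (text, label) pairs as one JSON object per line."""
    return "\n".join(json.dumps(to_chat_example(t, l)) for t, l in examples)
```

Using the same system prompt at training and inference time keeps the comparison with the base model as direct as possible.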

The results speak for themselves:

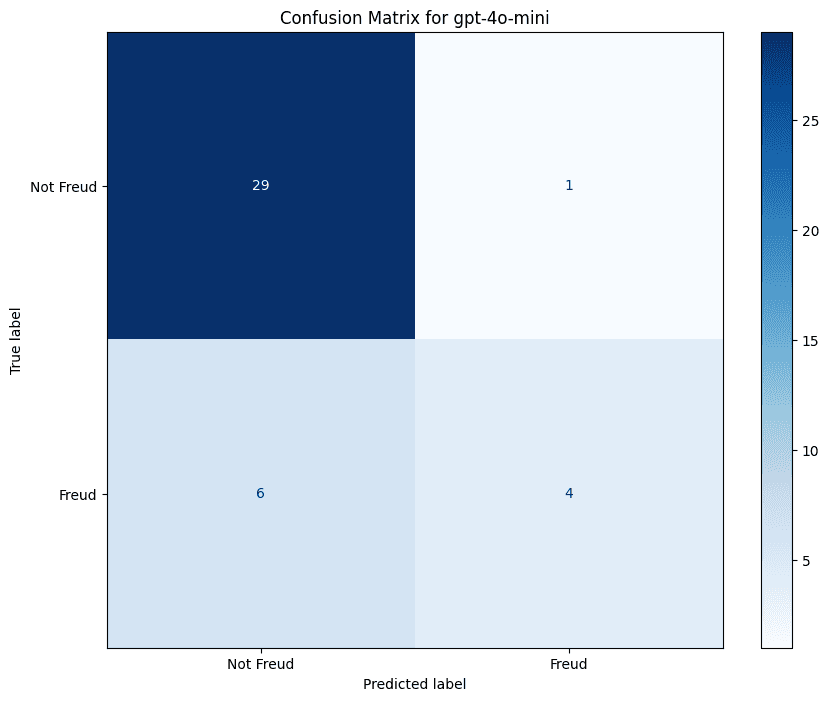

Before Fine-Tuning

The base model performs reasonably well but produces too many false negatives: it misses positive cases that should be caught.

After Fine-Tuning

The fine-tuned model is more accurate overall, and the gain is clearest on positive cases: it correctly identifies 8, versus 4 for the base model.
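Missed positive cases are exactly what recall measures, so it is a natural metric for this comparison. Here is a minimal sketch of computing it from predictions. The function names and the `"positive"` label are assumptions, and the data in the usage note is a toy example, not the project's results.

```python
from typing import Dict, Sequence


def confusion_counts(y_true: Sequence[str], y_pred: Sequence[str],
                     positive: str = "positive") -> Dict[str, int]:
    """Count true/false positives and negatives for a binary task."""
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for truth, pred in zip(y_true, y_pred):
        if pred == positive:
            counts["tp" if truth == positive else "fp"] += 1
        else:
            counts["fn" if truth == positive else "tn"] += 1
    return counts


def recall(c: Dict[str, int]) -> float:
    """Share of actual positives the model caught (0.0 if there are none)."""
    denom = c["tp"] + c["fn"]
    return c["tp"] / denom if denom else 0.0


def precision(c: Dict[str, int]) -> float:
    """Share of predicted positives that were correct (0.0 if there are none)."""
    denom = c["tp"] + c["fp"]
    return c["tp"] / denom if denom else 0.0
```

Running both models over the same held-out set and comparing `recall` shows directly whether fine-tuning reduced false negatives, while `precision` checks that it did not do so by over-predicting the positive class.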

Why This Matters

LLM fine-tuning is a high-value skill in the AI job market because it demonstrates:

- Understanding of the complete ML lifecycle, not just API consumption

- Ability to align AI models to specific business requirements

- A more sophisticated approach than prompt engineering alone

- Production-ready thinking about model performance and reliability

These techniques enable:

- Customization of models for specific business requirements

- Improved accuracy on domain-specific terminology

- Reduced false positives and false negatives

- Confidence scoring integrated into adaptive systems

Fine-Tuning as Part of RLHF Systems

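Fine-tuning a classifier can be a key component of larger RLHF (Reinforcement Learning from Human Feedback) systems. Strictly speaking, retraining on human-corrected labels is supervised fine-tuning rather than reinforcement learning, but it serves the same goal of aligning the model with human judgment. When humans review AI decisions and provide feedback, that feedback can be used to fine-tune the model, creating a continuous learning loop: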

- AI makes predictions with confidence scores

- Humans review low-confidence or flagged cases

- Feedback is captured as training data

- Model is fine-tuned to align better with human decisions

- System improves over time, requiring less human review

This is how you build self-evolving AI agents that get smarter through human feedback—a core component of production-scale RLHF systems. Fine-tuning isn't just a one-time improvement; it's a mechanism for continuous alignment with human judgment.
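The "feedback is captured as training data" step of the loop might look something like the sketch below. The `ReviewedCase` type and `build_training_set` function are hypothetical names, and treating the human label as ground truth for every reviewed case is an assumption rather than something the post specifies.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ReviewedCase:
    text: str
    model_label: str  # what the model predicted
    human_label: str  # what the reviewer decided


def build_training_set(cases: List[ReviewedCase]) -> Tuple[List[Tuple[str, str]], int]:
    """Turn reviewed cases into (text, label) pairs for the next fine-tuning run.

    The human label is used as the target for every case, including the ones
    where the model was already right. Also returns how many cases the
    reviewer corrected, which is a useful signal to track between rounds.
    """
    examples = [(c.text, c.human_label) for c in cases]
    corrections = sum(1 for c in cases if c.model_label != c.human_label)
    return examples, corrections
```

If the correction count falls from one round to the next, that suggests the model is converging on the reviewers' judgment.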

Technical Implementation

The project includes:

- Training pipeline: Scripts for fine-tuning OpenAI models

- Evaluation framework: Tools for measuring classification performance

- Confidence analysis: Methods for extracting and using confidence scores

- Comparison tools: Side-by-side evaluation of base vs fine-tuned models

This represents the kind of practical ML engineering that bridges research and production deployment.
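The post does not say how the project extracts confidence scores. One common approach, shown here as an assumption, is to use token log-probabilities: the Chat Completions API can return them when `logprobs` and `top_logprobs` are requested. The helper below is a hypothetical sketch that works on a plain dictionary of candidate tokens for the first output token, and it assumes each label is a single token.

```python
import math
from typing import Dict, Optional, Sequence, Tuple


def label_confidence(top_logprobs: Dict[str, float],
                     labels: Sequence[str]) -> Tuple[Optional[str], float]:
    """Pick the most likely label and a confidence normalized over the label set.

    top_logprobs maps candidate tokens to log-probabilities. Tokens that are
    not labels (after stripping whitespace and lowercasing) are ignored.
    Returns (None, 0.0) if no label token appears among the candidates.
    """
    probs: Dict[str, float] = {}
    for token, logprob in top_logprobs.items():
        key = token.strip().lower()
        if key in labels:
            probs[key] = probs.get(key, 0.0) + math.exp(logprob)
    if not probs:
        return None, 0.0
    total = sum(probs.values())
    best = max(probs, key=probs.get)
    return best, probs[best] / total
```

Normalizing over the label tokens only means the score answers "how sure is the model between these labels", which is usually what a routing threshold needs. These scores are not guaranteed to be well calibrated, so they are worth checking against held-out accuracy before trusting them.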

Production-Ready AI Thinking

What separates demos from production systems is attention to details like:

- Model alignment: Fine-tuning to match specific requirements

- Confidence scoring: Knowing when to trust automated decisions

- Continuous evaluation: Measuring performance on real-world data

- Human-in-the-loop integration: Designing for human oversight where needed

These aren't just academic exercises—they're the foundation of reliable AI systems that deliver business value.
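One way to make the automation-versus-accuracy trade-off concrete is to measure, for a given confidence threshold, how many cases would be handled automatically and how accurate those automated decisions are. The following is a minimal sketch; the function name and the record format are assumptions for illustration.

```python
from typing import List, Optional, Tuple


def coverage_and_accuracy(records: List[Tuple[float, bool]],
                          threshold: float) -> Tuple[float, Optional[float]]:
    """Evaluate a confidence threshold on labeled evaluation data.

    records holds (confidence, was_correct) pairs. Coverage is the share of
    cases at or above the threshold (handled without human review). Accuracy
    is computed over those automated cases only, or None if there are none.
    """
    automated = [correct for conf, correct in records if conf >= threshold]
    coverage = len(automated) / len(records) if records else 0.0
    accuracy = sum(automated) / len(automated) if automated else None
    return coverage, accuracy
```

Sweeping the threshold over a range of values and recomputing both numbers on fresh data gives a simple picture of how much review effort each accuracy level costs.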

Open Source

The complete project is available on GitHub, including training scripts, evaluation tools, and example datasets. It's designed to be a practical reference for anyone implementing similar classification systems.

This work emerged from real-world challenges in building production AI systems, and we're sharing it to help others tackle similar problems.