How AI Agents Do Things

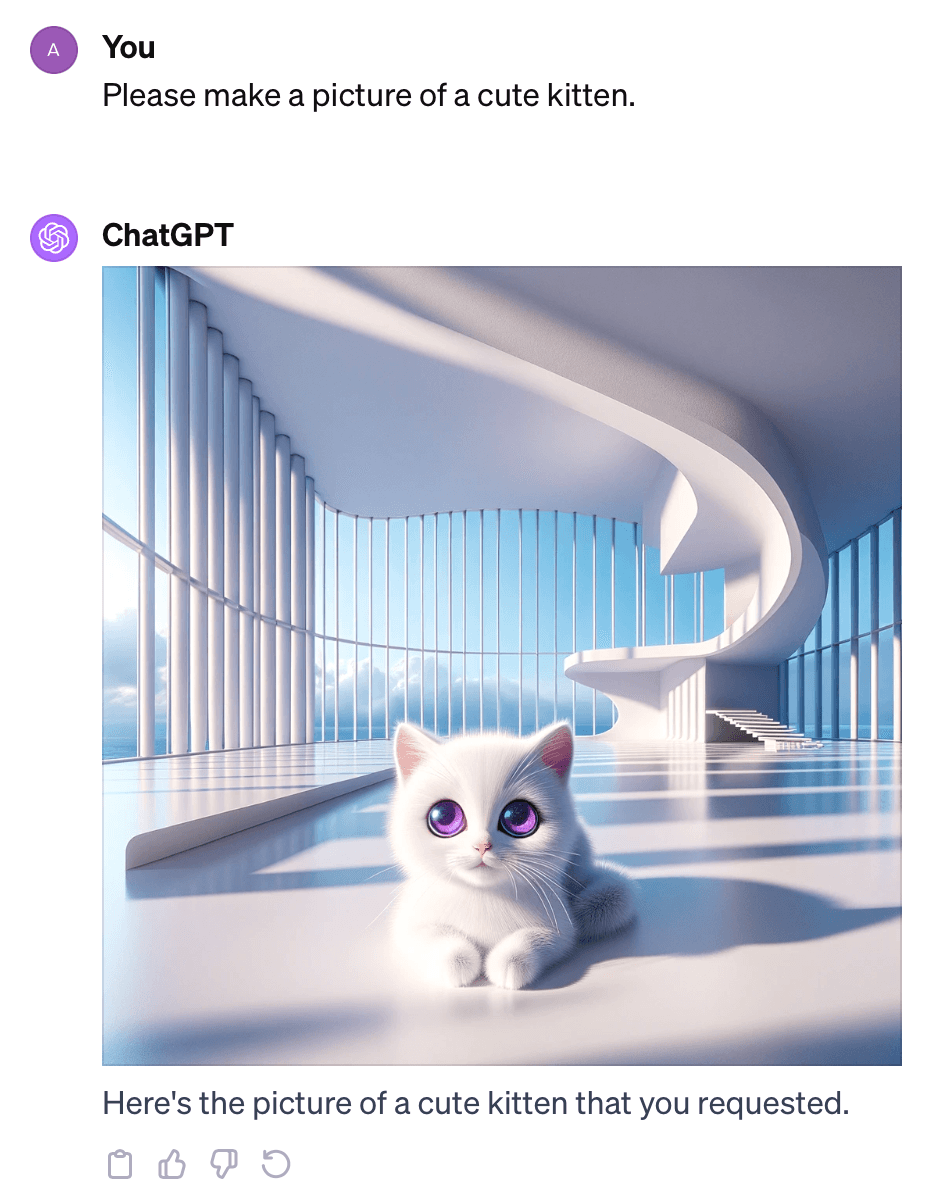

I needed a picture of a cute kitten to post on the Internet, since we all have to do our part or else there would be a shortage. I asked ChatGPT to make a picture of a cute kitten. And it made one.

How did this AI model somehow learn to make a picture of a cute kitten? Did the researchers at OpenAI somehow 'upload' the kitten-drawing skill set into GPT-4, like Neo learning Kung Fu in The Matrix by plugging a cable into the back of his head?

Not really. The answer is simpler, and it's a preview of a new type of software solution.

Give tools to the AI model

Imagine you’re conversing with an airline assistant who can type on their terminal at superhuman speeds to fetch the latest information to answer your questions.

This scenario is akin to how it works when we speak with an AI agent that can look up facts or draw pictures. Imagine the agent pausing the conversation to use a tool to look up the latest data or perform a quick calculation. Or maybe it uses a different tool to draw something, or send a message, or create an order. Perhaps it sets some long-running process in motion.

For AI agents in general, the tools that the model can use are called "actions." Actions are computer programs that you give to the agent so that it can use them when necessary. In the OpenAI API, "functions" are the basic building block for actions. In other systems, like Amazon Lex, you implement actions as AWS Lambda functions. But they all follow the same basic model: the agent application gives tools to the AI model.

How does the AI model use the tools?

But how does that work? How does the AI model execute the action for you? Isn't it complicated to teach an AI model how to draw a picture or look up an order in a database? Do you have to retrain the entire AI model every time you plug in a new action?

The answer to all these questions is simple and elegant: You perform the actions yourself. The AI model doesn't directly run any computer program; it asks you to do it and report back. That means your action can access anything you can, in whatever programming language you want, with whatever resources it needs.

The key concept is that you tell the AI model that the tools are available and how to use them, and it makes decisions about what tools to use and when and how. But the AI model doesn't do things directly. If the user asks the AI agent to make a picture of the kitten, then the AI model is responsible for deciding that this would be a good time to draw a kitten. It will respond to the AI agent application: It's time to draw a kitten, so pull out that "make image" tool that you told me about and use it to make a picture with the prompt, "A kitten." The AI agent application is then responsible for using some tool to draw a kitten. That tool happens to be an AI model also, but the point here is that the large language model that you're conversing with through the AI agent application does not directly make the image itself. It orchestrates.
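That division of labor can be sketched in a few lines of Python. This is only an illustration, not how ChatGPT is actually built: the `make_image` function, the `TOOLS` registry, and the shape of `model_request` are hypothetical names chosen for this example.

```python
import json


def make_image(prompt: str) -> str:
    """Hypothetical tool: a real one would call an image-generation service."""
    return f"image-for-{prompt.lower().replace(' ', '-')}.png"


# The application owns the tools. The model only sees their names and descriptions.
TOOLS = {"make_image": make_image}


def run_requested_tool(model_request: dict) -> str:
    """Execute the tool the model asked for and return its result."""
    tool = TOOLS[model_request["name"]]
    arguments = json.loads(model_request["arguments"])
    return tool(**arguments)


# What the model's decision might look like: a tool name plus JSON-encoded arguments.
model_request = {"name": "make_image", "arguments": '{"prompt": "A kitten"}'}
result = run_requested_tool(model_request)
print(result)
```

The model contributes the decision (which tool, which arguments); everything inside `run_requested_tool` runs in the application.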

The sequence

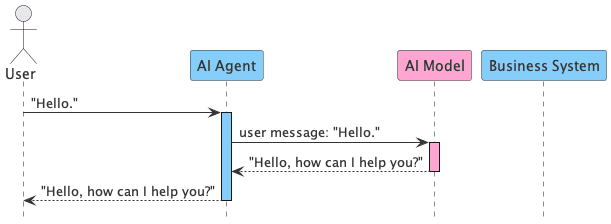

Here's what the process looks like. Normally, a question and answer would look like this:

The agent relays the user's "Hello" message to the model, the model responds, and the agent displays the message for the user in whatever chat UI they're using. The business system is not involved.

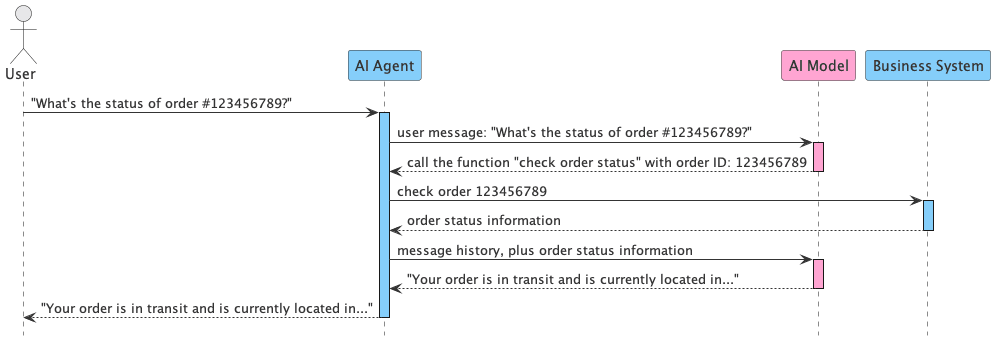

If the user asks a question and the model decides to call a function, the sequence looks a little different. Here's an example of the user asking for the status of an order, which will require a lookup:

The AI model tells you the name of the function to run and the parameters to use. As the agent application, you must execute that function, then report back to the AI API with the results.

You end up calling the API two times instead of once. You “augment” the second API request with the results of the function call that you perform yourself.

A basic request and response

Let's see what the API requests and responses look like, without any code. We'll assume that we're working with an OpenAI-style API where the actions are handled through "function-calling".

Here's what the simple request from the agent application to the OpenAI API looks like in the first sequence above:

request from application to OpenAI (contains the conversation history)

The OpenAI API sends a response that includes the next message in the conversation history, generated by the AI model. Messages from the model are labeled with the role "assistant".

response from OpenAI to the application
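For readers who prefer to see the shape of the data, the exchange might look roughly like this in Python. The message structure (a list of dictionaries with `role` and `content`) follows the OpenAI Chat Completions format; the wording of the messages is made up for illustration, and the response is simplified to just the message.

```python
# Request: the conversation history so far, which is just the user's greeting.
request = {
    "model": "gpt-3.5-turbo",
    "messages": [
        {"role": "user", "content": "Hello"},
    ],
}

# Response (simplified): the next message in the conversation, written by the model.
response_message = {
    "role": "assistant",
    "content": "Hello! How can I help you today?",
}

# The application relays the text to the user in its chat UI.
print(response_message["content"])
```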

Now let's give it tools

As the application, we're responsible for managing the conversation history. OpenAI doesn't maintain any sort of session state on their side. Every time we send the next request in a conversation to the OpenAI API, we must provide the conversation history. We do that by taking the previous conversation history and adding the latest response from the AI, and the latest message from the user.
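A minimal sketch of that bookkeeping, assuming the history is kept as a plain Python list of message dictionaries. The helper name `next_request_messages` is hypothetical.

```python
def next_request_messages(history: list, assistant_reply: str, user_message: str) -> list:
    """Return the old history plus the latest AI reply and the latest user message."""
    return history + [
        {"role": "assistant", "content": assistant_reply},
        {"role": "user", "content": user_message},
    ]


history = [{"role": "user", "content": "Hello"}]
history = next_request_messages(
    history,
    assistant_reply="Hello! How can I help you today?",
    user_message="What's the status of my order?",
)

# This full list is what gets sent with the next request.
for message in history:
    print(f'{message["role"]}: {message["content"]}')
```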

This time, we'll also give it a couple of functions that it can call if it decides that it should. We add a list of those functions to the request along with the conversation history. Each entry in the list is a description that tells the AI model the name of the function, what it's for, and how to call it. Different functions require different parameters, and you use this description to tell the model that when it wants to look up an order, it needs to provide an order ID. Or that when it wants to create a new order, it needs to provide the customer information and the order items.

There's a structured format (JSON) for describing the functions, but let's skip the code in this simplified diagram for the second sequence above:

request from application to OpenAI (contains the conversation history and the functions)
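For the curious, such a request might be assembled like this. The layout follows the legacy `functions` parameter of the OpenAI Chat Completions API; the `create_order` function and its parameter names (`customer_name`, `items`) are assumptions invented for this sketch, as is the `required` list.

```python
functions = [
    {
        "name": "check_order_status",
        "description": "Check the status of an order using its ID",
        "parameters": {
            "type": "object",
            "properties": {
                "order_id": {"type": "string", "description": "The order ID."},
            },
            "required": ["order_id"],
        },
    },
    {
        # Hypothetical second function, described the same way.
        "name": "create_order",
        "description": "Create a new order for a customer",
        "parameters": {
            "type": "object",
            "properties": {
                "customer_name": {"type": "string"},
                "items": {"type": "array", "items": {"type": "string"}},
            },
            "required": ["customer_name", "items"],
        },
    },
]

request = {
    "model": "gpt-3.5-turbo",
    "messages": [
        {"role": "user", "content": "Hello"},
        {"role": "assistant", "content": "Hello! How can I help you today?"},
        {"role": "user", "content": "What's the status of my order?"},
    ],
    "functions": functions,
}

print([f["name"] for f in request["functions"]])
```

Note that nothing executable is sent: the request carries only names, descriptions, and parameter schemas.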

When the AI API gets that request, what's in the request is the only thing it knows about the conversation history.

The AI model can see that it has some tools. Should it use one? Remember that the model's job is to make decisions about when to use the tools but not to use them directly. In this case, the user is asking a question about looking up an order. The model can see that it has a tool for doing that. Should it do that now?

It can also see that the "check order status" tool requires an order ID. But the conversation doesn't include an order ID.

So, instead of using the "check order status" tool, the model asks for the information that it would need to use that tool:

response from OpenAI to the application
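In code, this response is an ordinary text message, and the application needs a way to tell it apart from a request to run a function. A small sketch, assuming the legacy OpenAI message shape where a tool request appears under a `function_call` key; the helper name and message text are illustrative.

```python
def is_function_call(message: dict) -> bool:
    """True when the model is asking the application to run a function."""
    return message.get("function_call") is not None


# The model lacks an order ID, so it replies with a question instead of a tool request.
clarifying_question = {
    "role": "assistant",
    "content": "Sure, I can check on that. What is your order ID?",
}

if is_function_call(clarifying_question):
    print("Run the requested function")
else:
    print(clarifying_question["content"])  # relay the text to the user
```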

The user then responds with the order ID:

request from application to OpenAI (contains the conversation history and the functions)

Now the model looks at the situation and concludes that the user is asking for the status of order ID 123456789. It looks at its list of available functions and sees a function called "check order status" that requires an order ID. Hey, look! We have an order ID! The planets are aligning!

This is the critical part that the AI model plays in taking action on behalf of the user: It reasons about what actions to take, when to take action, and how to take action.

In this case, it can see that the user made a request that an available function can satisfy, and it sees that it has the required parameters. And so, it makes the decision to take action.

The model asks you to take an action

The AI model asks for a check on that order ID by responding like this:

response from OpenAI to the application
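Sketched as data, that response might look like the following. It assumes the legacy OpenAI `function_call` format, in which the arguments arrive as a JSON-encoded string rather than as a dictionary.

```python
import json

# Simplified response message: no text content, just a request to run a function.
response_message = {
    "role": "assistant",
    "content": None,
    "function_call": {
        "name": "check_order_status",
        "arguments": '{"order_id": "123456789"}',
    },
}

call = response_message["function_call"]
function_name = call["name"]
arguments = json.loads(call["arguments"])  # decode the JSON string into a dict

print(function_name, arguments)
```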

As the agent application, performing the task is now your job. You must execute that function. Your code then queries your business system to get raw order details:

orderId: 123456789

status: 046-IN-TRANSIT

lastUpdate: 1648762800000

location: OAKLAND, CA
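A dummy version of that lookup could be as simple as the function below. It is a hypothetical stand-in for a real database query or API call, returning the same raw values shown above. The timestamp conversion assumes `lastUpdate` is in milliseconds since the Unix epoch, which the source data doesn't state explicitly.

```python
from datetime import datetime, timezone


def check_order_status(order_id: str) -> dict:
    """Hypothetical stand-in for a query against the business system."""
    return {
        "orderId": order_id,
        "status": "046-IN-TRANSIT",
        "lastUpdate": 1648762800000,
        "location": "OAKLAND, CA",
    }


order = check_order_status("123456789")

# Assumption: lastUpdate is epoch milliseconds, so divide by 1000 for seconds.
last_update = datetime.fromtimestamp(order["lastUpdate"] / 1000, tz=timezone.utc)
print(order["status"], last_update.isoformat())
```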

Great, you have some raw data. But you can't show that ugly stuff to the user.

Use the AI to describe the results

To generate an AI response to the user, you send a second API request to the OpenAI API. Like before, you're sending a conversation history with the request, and that's the only way the AI model knows anything about what's happening. In that history, there's a message from when the user asked a question, a note that the model decided to call a function, followed by the function call results.

request from application to OpenAI (contains the conversation history and the functions)
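Here is a sketch of what the conversation history in that second request might contain, again assuming the legacy OpenAI function-calling message format. The user and assistant wording is invented; the function result is passed back as a JSON string in a message with the role `function`.

```python
import json

order = {
    "orderId": "123456789",
    "status": "046-IN-TRANSIT",
    "lastUpdate": 1648762800000,
    "location": "OAKLAND, CA",
}

messages = [
    {"role": "user", "content": "What's the status of my order?"},
    {"role": "assistant", "content": "Sure! What is your order ID?"},
    {"role": "user", "content": "It's 123456789."},
    # The note that the model decided to call a function:
    {
        "role": "assistant",
        "content": None,
        "function_call": {
            "name": "check_order_status",
            "arguments": '{"order_id": "123456789"}',
        },
    },
    # The results of the function that the application executed:
    {
        "role": "function",
        "name": "check_order_status",
        "content": json.dumps(order),
    },
]

print(messages[-1]["content"])
```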

The AI model will take the value that you gave it and use that to generate the next message in the conversation:

response from OpenAI to the application

Division of responsibilities

Managing the conversation history is our prerogative as the application, which gives us Orwellian control over the past and the present. We can use that to insert things like the status information for an order, proprietary facts, or anything potentially useful for the model as it generates responses.

We're also executing the functions, eliminating concerns about the complexities of deploying code into the AI API to run it.

We're doing all the real work ourselves when we use function calling, but we're using the reasoning ability of the AI model to decide what tools to use, when to use them, and how to use them.

Application

- Manage conversation history

- Execute functions

AI Model

- Generate text

- Decide what tools to use, when and how

The full application stack for an AI agent looks like this:

AI Agent Application Stack (diagram): the AI agent application, the existing business system, and the AI model

In this diagram, an existing business system (bottom left) now supports a new AI agent user interface, managed by the AI agent application. The AI agent code runs separately from the business system and integrates with it through API calls, database queries, or whatever makes sense. The agent uses the large language model (LLM) to generate text for the conversation, using function calling to access the existing software system.

See it for yourself

Go and create an OpenAI secret key and open this Colab notebook, where you can see it in action.

Cost

The only costs will be for the OpenAI API, and we're going to use GPT-3.5 Turbo, so it's cheap. You would have to work hard to spend more than a few pennies on this demo.

Requirements

- OpenAI API key: Visit your API keys page to configure an API key that you'll use for the requests.

- Google Colab account [FREE]: Create a free Colab account if you don't already have one. This notebook is so simple that you can run it for free.

Setup

First, open the (new) "secrets" panel in Colab and create a new secret called openai, with your secret OpenAI API access token as the value.

Then, run the first cell of the notebook to use pip to install the OpenAI SDK for Python.

Now you're ready to run the code.
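Inside a notebook, reading that secret might look something like the sketch below. This is not necessarily what the notebook itself does. It assumes Colab's `google.colab.userdata` module and the secret name `openai` from the setup step; the fallback to an `OPENAI_API_KEY` environment variable is an addition so the snippet also runs outside Colab.

```python
import os


def load_openai_key() -> str:
    """Read the API key from Colab secrets, or from the environment elsewhere."""
    try:
        from google.colab import userdata  # only importable inside Colab
        return userdata.get("openai")
    except ImportError:
        # Assumption: outside Colab, the key lives in an environment variable.
        return os.environ.get("OPENAI_API_KEY", "")


api_key = load_openai_key()
print("key loaded:", bool(api_key))
```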

Run it

Run the second cell in the notebook, and it will use the secret that you set up to send a request to the OpenAI API for GPT-3.5 Turbo. Note that the code provides a function list with a check_order_status function.

As we discussed above, you don't send an actual function to OpenAI. You don't send them any code. You describe the functions that exist:

    function_list = [
        {
            "name": "check_order_status",
            "description": "Check the status of an order using its ID",
            "parameters": {
                "type": "object",
                "properties": {
                    "order_id": {
                        "type": "string",
                        "description": "The order ID."
                    }
                }
            }
        }
    ]

The AI model will see that, and it will keep in mind that it has a function that takes an order ID for checking the order status. If anyone asks for something like that, then it knows how. It's like you're making a contract with the AI about how to handle user requests. A promise that you will help whenever it needs.

You'll see from the output in the notebook that the first response from the AI will tell your code to run a function. Your code runs a (dummy) function and then sends the second request to the AI model. And then, the model responds by generating text you can relay to the user.
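The two-request flow can be sketched as a single function. This is not the notebook's code. It assumes a client shaped like the OpenAI Python SDK (version 1 or later) with the legacy `functions` parameter, and it uses a small fake client so the sketch runs offline; `answer`, `FakeCompletions`, and the dummy `check_order_status` are hypothetical names.

```python
import json
from types import SimpleNamespace


def check_order_status(order_id: str) -> dict:
    """Dummy lookup, standing in for a real business system."""
    return {"orderId": order_id, "status": "046-IN-TRANSIT"}


def answer(client, messages: list, function_list: list) -> str:
    """Handle one user turn: ask the model, run a requested function, ask again."""
    first = client.chat.completions.create(
        model="gpt-3.5-turbo", messages=messages, functions=function_list
    ).choices[0].message
    if first.function_call is None:
        return first.content  # plain text reply, no function needed

    call = first.function_call
    result = check_order_status(**json.loads(call.arguments))
    # Augment the history with the model's request and the function result.
    messages = messages + [
        {"role": "assistant", "content": None,
         "function_call": {"name": call.name, "arguments": call.arguments}},
        {"role": "function", "name": call.name, "content": json.dumps(result)},
    ]
    second = client.chat.completions.create(
        model="gpt-3.5-turbo", messages=messages, functions=function_list
    ).choices[0].message
    return second.content


class FakeCompletions:
    """Offline stand-in that mimics the response shape of the SDK."""

    def __init__(self):
        self.calls = 0

    def create(self, model, messages, functions):
        self.calls += 1
        if messages[-1]["role"] == "function":
            msg = SimpleNamespace(content="Order 123456789 is in transit.", function_call=None)
        else:
            call = SimpleNamespace(name="check_order_status",
                                   arguments='{"order_id": "123456789"}')
            msg = SimpleNamespace(content=None, function_call=call)
        return SimpleNamespace(choices=[SimpleNamespace(message=msg)])


completions = FakeCompletions()
fake_client = SimpleNamespace(chat=SimpleNamespace(completions=completions))
text = answer(fake_client, [{"role": "user", "content": "Where is order 123456789?"}], [])
print(text, completions.calls)
```

With the real SDK, you would pass a client created by `OpenAI(api_key=...)` in place of `fake_client`, along with the function list described above.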

More information

You can find more examples of function-calling with the OpenAI API in the OpenAI Cookbook.

You can also see an example of a text classifier built using function calling for the article about maximizing profit, not intelligence, here in this Colab notebook or on GitHub.

Not just a friendly voice

To wrap it up, think of the AI agent as a super-helpful airline desk assistant, but with some extra tricks up its sleeve. It's not just there for a chat – it can quickly handle tasks like booking travel or finding flight details. And it does all this quicker than even the fastest-typing airline staff. While this method requires an additional step, the payoff is substantial: a live connection to the real world. AI models that can do things for you, which is the definition of an AI agent.

The artificial intelligence industry is increasingly focused on integration since an AI agent is much more valuable if it can do things you care about in the systems you already use. One of the key ways to integrate your existing systems with the latest and greatest AI models is to give them a toolbox for accessing your system.

We can help

We have experience integrating enterprise business systems with AI models to create AI agents that can report to you about metrics, notify you about alarms, or trigger actions in your system like creating orders or other business use cases. We also have experience reliably operating mission-critical software features over the long term. If you're looking for help with something like that, please get in touch.