LangChain by Example

You have problems, and you know that AI can help solve them. You also know that you need software to get you there. But automating AI can be a challenge. Software development was hard enough before the machines started talking back and having opinions! Now, it's even more difficult because the components you're building on can be unpredictable, producing different results at different times.

That's where we come in. Our team specializes in making these advanced tools accessible. One powerful tool we're excited to share with you is LangChain. LangChain helps you stay focused on solving your problems and creating effective solutions, rather than getting bogged down in the complexities of managing the underlying AI technology.

We'd like to share some examples of practical solutions we've found using LangChain:

Use AI models from any company's AI API

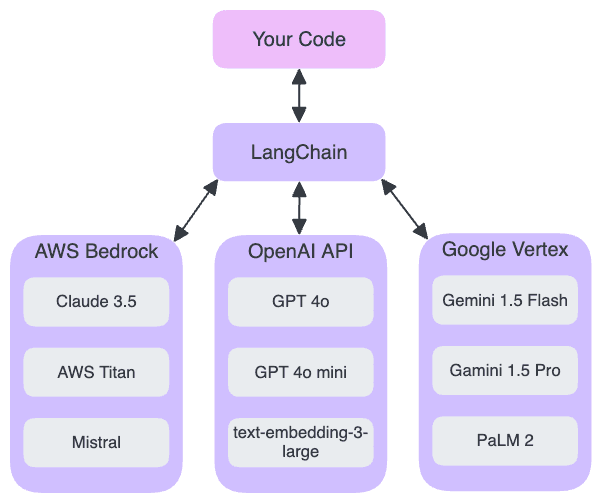

Using AI models on-demand as interchangeable components within larger software architectures, connecting to various AI API providers.

Two Models, One Task

Creating multi-step processes that break down complex tasks, allowing the use of more cost-effective AI models while achieving better results – a key principle in leveraging AI for practical solutions.

Safeguard Retry Loops

Building robust AI components that can validate LLM output and retry with hints, improving reliability.

Use an LLM to process data

Feeding large volumes of data to an AI model for processing, for things like analyzing tens of thousands of articles, phone call transcripts, or medical transcriptions.

Structuring Data

Using LangChain output parsers to extract structured data from datasets. In this example: Quickly searching a large database of raw recipe text, looking for something we can make with the one avocado that we have.

These are just a few examples of what's possible. Whether you're tackling business challenges, working on academic research, or exploring AI as a hobby, LangChain can help you harness the power of language models more effectively.

With so many approaches available for building AI applications, you might wonder: Why LangChain? Let's explore what makes this tool stand out and how it can streamline your AI development process.

Why LangChain?

We didn't start with LangChain. Our initial approach to creating "agentic" applications (a term with as many definitions as developers) was bare-bones: Python code for LLM API requests, no frameworks. This gave us control and deep understanding, but it came with challenges.

As we built more sophisticated applications, the complexity grew. We created systems to manage LLM chat histories, switched to LiteLLM to support various AI APIs, and wrote increasingly complex code to parse LLM responses. Soon, we were spending as much time on infrastructure as on solving our core problems.

We knew we needed a better solution, so we conducted extensive research into various frameworks. We evaluated options like CrewAI, AutoGen, and others.

After careful consideration, we chose LangChain. It stood out for its ability to provide power through flexibility and control, while abstracting away many details we didn't want to handle directly. Crucially, LangChain doesn't impose rigid opinions about application architecture, unlike some alternatives that prescribe specific paradigms (such as CrewAI's concept of building applications with "crews" of "agents" with personas).

What is LangChain?

LangChain is a framework for building applications with LLMs. It handles prompts. It manages logic chains. It parses outputs. With LangChain, you focus on AI workflows for solving your use cases. You don't have to mess with API calls or the differences between APIs. It's a simple abstraction that's easy to understand. It's powerful through its flexibility. It's well used and maintained by masses of other developers. And it works.

Let's look at some examples of ways you can use it to solve problems.

Example 1: Use AI models from any company's AI API

OpenAI gives you example code for calling their API. It's easy. Really.

It starts to get harder when you need to connect the same code to more than one AI API. It's not just a matter of changing a URL and an API key: Different APIs have different input and output structures. Even if you use something like AWS Bedrock that can connect to many different models, your code has to account for some models accepting a top_p parameter (Anthropic Claude, Mistral AI, Meta Llama) while others use topP (Amazon Titan, AI21 Labs Jurassic-2). Some support top_k and some don't. Some support seed and some don't. Some have different request formats, with different ways of defining "functions" or "tools".
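To make the parameter-name problem concrete, here is a minimal sketch of the kind of translation layer you would otherwise end up maintaining yourself. The family labels, the SAMPLING_PARAM_NAMES table, and the to_provider_params helper are all hypothetical names invented for illustration, and the table covers only the top_p versus topP difference mentioned above. Real APIs differ in many more ways.

```python
# Hypothetical lookup table: which spelling of the nucleus-sampling
# parameter each model family expects (per the examples named above).
SAMPLING_PARAM_NAMES = {
    "anthropic": "top_p",
    "mistral": "top_p",
    "meta": "top_p",
    "amazon-titan": "topP",
    "ai21-jurassic": "topP",
}


def to_provider_params(family: str, top_p: float) -> dict:
    """Return the request fragment for one model family (illustrative only)."""
    try:
        name = SAMPLING_PARAM_NAMES[family]
    except KeyError:
        raise ValueError(f"Unknown model family: {family!r}") from None
    return {name: top_p}


print(to_provider_params("anthropic", 0.9))     # {'top_p': 0.9}
print(to_provider_params("amazon-titan", 0.9))  # {'topP': 0.9}
```

Every new provider or parameter means another row, another special case, and another thing to test. That is the bookkeeping you hand off when you put a framework between your code and the APIs.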

Who will keep up with all of it? You shouldn't. That's what LangChain is for: You can connect your code through LangChain to many different AI APIs. Your code can worry about its problems while LangChain worries about those details.

LangChain isn't the only option if all you want is to connect your same code to many different AI APIs. Another great option is LiteLLM, which makes every AI API look like the OpenAI API so that you can use the same code for everything. That strategy worked for us — to a point. When we started writing lots of code for managing conversation histories and parsing model outputs we realized that we were reinventing wheels when we should have been focused on solving problems. LangChain seemed like the best option for abstracting those details without forcing us to conform to a framework's paradigm.

Here's a minimal LangChain AI API request:

from langchain_openai import ChatOpenAI

model = ChatOpenAI(model_name="gpt-4o")

model.invoke("Is the Sagrada Família located in Barcelona?").content

LangChain takes care of doing this:

request from application to OpenAI

response from OpenAI to the application

Simple!

If you do this same request with the OpenAI API then you get the response in message = completion.choices[0].message.content. LangChain encapsulates those details so that you can think about what you're doing instead of working to accommodate the API.
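Here is a small sketch of what "accommodating the API" looks like without a framework. The two dictionaries are simplified stand-ins modeled on an OpenAI-style chat completion and an Anthropic-style message response, showing only the fields needed to find the reply text. The extract_text helper is hypothetical.

```python
# Simplified stand-ins for two raw API responses. Only the fields needed
# to find the reply text are shown; real responses carry more metadata.
openai_style = {
    "choices": [{"message": {"role": "assistant", "content": "Yes, it is."}}]
}
anthropic_style = {
    "role": "assistant",
    "content": [{"type": "text", "text": "Yes, it is."}],
}


def extract_text(response: dict) -> str:
    """Pull the reply text out of either response shape (hypothetical helper)."""
    if "choices" in response:
        return response["choices"][0]["message"]["content"]
    # Anthropic-style: concatenate the text blocks.
    return "".join(
        block["text"] for block in response["content"] if block["type"] == "text"
    )


assert extract_text(openai_style) == extract_text(anthropic_style)
```

In the LangChain examples in this article, both cases reduce to reading .content from the object that invoke returns.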

That's useful because LangChain requests to other AI APIs, like AWS Bedrock, Anthropic, or Google Vertex, look just like LangChain requests to OpenAI. This example of sending a request to Claude 3.5 Sonnet looks almost exactly like the example above that uses OpenAI, even though the APIs and models have different details. Only the import and the model ID change.

from langchain_aws import ChatBedrock

model = ChatBedrock(
    model_id="anthropic.claude-3-5-sonnet-20240620-v1:0",
)

model.invoke("Is the Sagrada Família located in Barcelona?").content

request from application to AWS Bedrock

response from AWS Bedrock to the application

Sorting out the details of the differences between the different AI APIs and models could consume hours of your time up front. And complications related to managing those details could cost you even more time and delayed progress in the future. Shifting responsibility for those details into LangChain keeps you focused on your goals.

Follow along and run the examples

You can experience everything in the article yourself, for free, without setting up anything on your computer. All the example notebooks are hosted on GitHub. The quickest way to run them is to go to this Google Colab notebook for the first example.

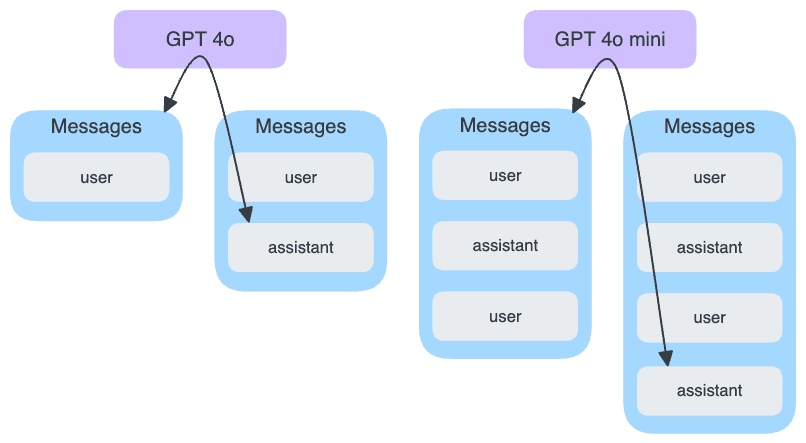

Example 2: Two models, one task

You can use the capability of connecting to many different models to use the right model for the right job within a larger application.

As an example, what if what we really want from the previous examples is a yes/no classification? The model has a lot to say, but really all we want is a yes or a no.

We know that adding instructions to the prompt to tell the model to give us only a "yes" or "no" will generally hurt the accuracy of the answer. So, to get the best accuracy, we want to let the model speak freely. Then we need to categorize the answer as either a yes or a no. We can use another LLM call for that.

But summarizing an answer into either a "yes" or a "no" is pretty simple. We probably don't need an expensive model for that. That's how LangChain helps us here: We can use an expensive model for the first question where the accuracy really matters, and then we can use a cheaper model for the final classification.

An AI conversation is a message list at heart, and we can pass a message list around from one model to another. We can pass in our user message (the prompt with the question) and a large model like GPT 4o can answer the question as accurately as possible using chain-of-thought prompting or other techniques. We add that response to the message history as an assistant message. Then we can append a follow-up question to that message list and pass it to a different model. The final assistant message will be generated by the second model.

It's straightforward to implement ideas like that with LangChain. Here's the code for implementing the above diagram to use GPT 4o for the answer and GPT 4o mini for the yes/no classification:

from langchain_openai import ChatOpenAI
from langchain_core.messages import HumanMessage, AIMessage

# A larger model for the detailed answer, a cheaper one for the classification
chain_1 = ChatOpenAI(temperature=0.7, model_name="gpt-4o")
chain_2 = ChatOpenAI(temperature=0, model_name="gpt-4o-mini")

question = "Is the Sagrada Família located in Barcelona?"

messages = [
    HumanMessage(
        content=(
            f"Question: {question}\n"
            "Please provide a detailed explanation before answering whether the statement is true or false."
        )
    )
]

# Step 1: let the larger model answer freely
detailed_response = chain_1.invoke(messages)
detailed_answer = detailed_response.content
print("Detailed answer:", detailed_answer)

# Step 2: append the answer and a follow-up question, then ask the cheaper model
messages.extend([
    AIMessage(content=detailed_answer),
    HumanMessage(
        content="Based on the previous messages, categorize the response strictly as either 'Yes' or 'No'."
    )
])

yes_no_response = chain_2.invoke(messages)
yes_no_answer = yes_no_response.content.strip()
print("Yes/No Answer:", yes_no_answer)

request from application to GPT 4o

response from GPT 4o to the application

request from application to GPT 4o mini

response from GPT 4o mini to the application

This two-step pattern gives us the best of both worlds: High accuracy from a large and expensive model, and data structuring from a smaller and cheaper model.

LangChain of course makes it easy to try different APIs and different models. Here's the same two-step sequence, but using Claude 3.5 Sonnet and Llama 3:

from langchain_aws import ChatBedrock
from langchain_core.messages import HumanMessage, AIMessage

chain_1 = ChatBedrock(model_id="anthropic.claude-3-5-sonnet-20240620-v1:0")
chain_2 = ChatBedrock(model_id="meta.llama3-8b-instruct-v1:0")

question = "Is the Sagrada Família located in Barcelona?"

messages = [
    HumanMessage(content=f"Question: {question}\nPlease provide a detailed explanation before answering whether the statement is true or false.")
]

# Step 1: detailed answer from the larger model
detailed_response = chain_1.invoke(messages)
detailed_answer = detailed_response.content
print("Detailed answer:", detailed_answer)

# Step 2: yes/no classification from the smaller model
messages.extend([
    AIMessage(content=detailed_answer),
    HumanMessage(
        content="Based on the previous messages, categorize the response strictly as either 'Yes' or 'No'."
    )
])

yes_no_response = chain_2.invoke(messages)
yes_no_answer = yes_no_response.content.strip()
print("Yes/No Answer:", yes_no_answer)

You could even send one request to OpenAI and the follow-up request to AWS Bedrock. LangChain handles the details so that you can focus on your solution.
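As a rough sketch of that idea, the two-step sequence can be wrapped in a function that doesn't care which provider each model comes from. The answer_then_classify function and the FakeModel stand-in are hypothetical names. The only assumption about the models is that they have an invoke method returning an object with a content attribute, as the chat models in the examples above do. Messages are written as (role, text) tuples, a shorthand that LangChain chat models accept in place of HumanMessage and AIMessage objects.

```python
from dataclasses import dataclass


def answer_then_classify(answer_model, classifier_model, question: str) -> str:
    """Ask one model for a detailed answer, then a second one for Yes/No."""
    messages = [
        ("human", f"Question: {question}\n"
                  "Please provide a detailed explanation before answering."),
    ]
    detailed = answer_model.invoke(messages).content
    messages += [
        ("ai", detailed),
        ("human", "Based on the previous messages, categorize the response "
                  "strictly as either 'Yes' or 'No'."),
    ]
    return classifier_model.invoke(messages).content.strip()


# Stand-ins so the sketch runs offline; swap in real chat models, for
# example ChatOpenAI for the first and ChatBedrock for the second.
@dataclass
class FakeReply:
    content: str


class FakeModel:
    def __init__(self, reply: str):
        self.reply = reply
        self.seen = None  # last message list received

    def invoke(self, messages):
        self.seen = list(messages)
        return FakeReply(self.reply)


big = FakeModel("The Sagrada Família is a basilica in Barcelona, Spain.")
small = FakeModel(" Yes ")
result = answer_then_classify(big, small, "Is the Sagrada Família located in Barcelona?")
print(result)  # Yes
```

Because the function only relies on invoke and content, the first model could be an OpenAI model and the second a Bedrock model without any change to the function body.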

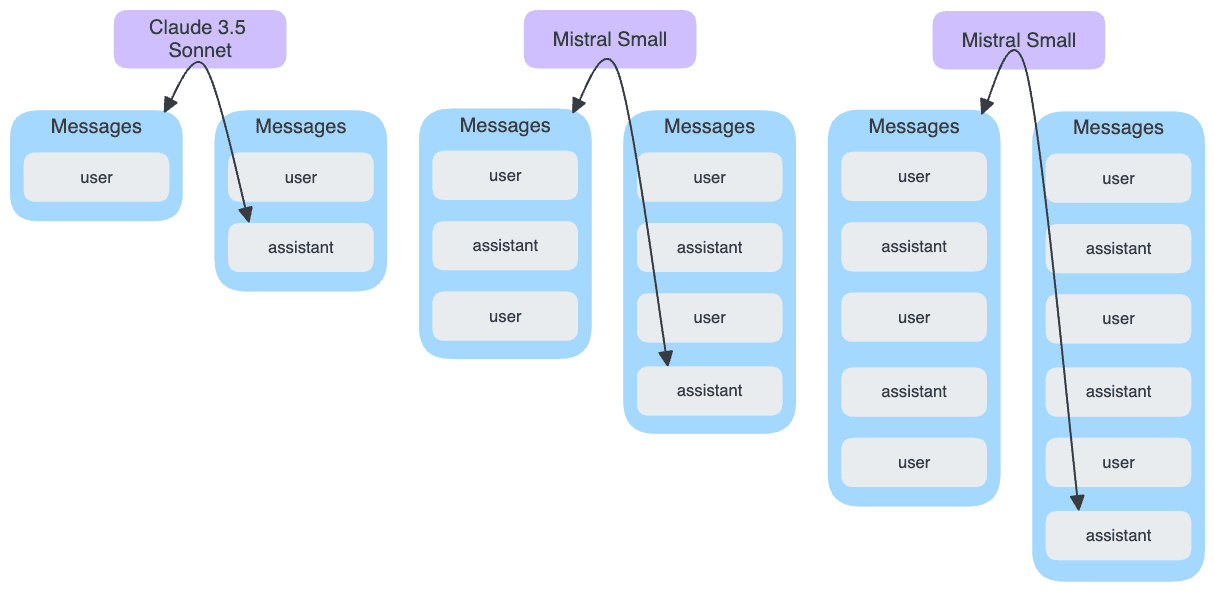

Example 3: Add a safeguard retry loop

LLMs can be talkative. You just want a "yes" or a "no" but they'll say things like, "The answer is: No."

We have seen how you can add a subsequent LLM call to classify a wordy answer into the "yes" or "no" that we're looking for. But, what if the second LLM call produces something other than "yes" or "no"? As you work with smaller and cheaper models you'll need to work harder to meet in the middle with them. There are lots of tools for that, and one of the most important is the retry loop as a safeguard.

After we call the LLM the second time to get a "yes" or a "no", we can check to make sure we got a valid answer. If not, then we can loop back and ask the second LLM a follow-up question.

from langchain_core.messages import HumanMessage, AIMessage
from langchain_aws import ChatBedrock

chain_1 = ChatBedrock(model_id="anthropic.claude-3-5-sonnet-20240620-v1:0")
chain_2 = ChatBedrock(model_id="mistral.mistral-small-2402-v1:0")

question = "Is the Sagrada Família located in Barcelona?"

# Step 1: detailed answer from the larger model
cot_response = chain_1.invoke([HumanMessage(content=question)])
detailed_answer = cot_response.content

message_history = [
    HumanMessage(content=question),
    AIMessage(content=detailed_answer),
    HumanMessage(
        content="Based on the previous messages, categorize the response strictly as either 'Yes' or 'No'."
    )
]

max_retries = 3
retry_count = 0

# Step 2: ask the cheaper model, and retry with a hint if the answer is invalid
while retry_count < max_retries:
    yes_no_response = chain_2.invoke(message_history)
    yes_no_answer = yes_no_response.content.strip().lower()

    if yes_no_answer in ["yes", "no"]:
        print(f"Yes/No Answer: {yes_no_answer.capitalize()}")
        break
    else:
        print(f"Attempt {retry_count + 1}: Invalid response '{yes_no_answer}'. Retrying...")
        retry_count += 1
        if retry_count < max_retries:
            message_history.append(HumanMessage(content="Your previous response was not valid. Please answer ONLY with 'Yes' or 'No', without any additional text or punctuation."))

if retry_count == max_retries:
    print("Failed to get a valid Yes/No answer after maximum retries.")

Now, we can use really cheap models that aren't so reliable. That's okay because we can retry, up to a limit, until it works. In this example, Mistral Small (24.02) had trouble but it eventually got there after a couple of retries. And, since it's such a cheap model, that was still cheaper than doing one call with the more-expensive model.

request from application to Claude 3.5 Sonnet

response from Claude 3.5 Sonnet to the application

request from application to Mistral Small (24.02)

response from Mistral Small (24.02) to the application

request from application to Mistral Small (24.02)

response from Mistral Small (24.02) to the application
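If you use this pattern in more than one place, it can be pulled into a small helper. The sketch below is one possible shape, not the code from the notebook: normalize_yes_no, classify_with_retries, and FlakyModel are hypothetical names, and it makes two choices that differ from the loop above. It tolerates stray punctuation such as "No.", and it keeps the invalid reply in the history as an "ai" message so that the hint has something to refer to.

```python
import re
from dataclasses import dataclass


def normalize_yes_no(text: str):
    """Return 'yes' or 'no' if the reply is essentially just that, else None."""
    cleaned = re.sub(r"[^a-z]", "", text.lower())
    return cleaned if cleaned in ("yes", "no") else None


def classify_with_retries(model, messages, max_retries: int = 3):
    """Invoke `model` until it returns a clean yes/no, up to max_retries calls.

    Returns (answer, attempts); answer is None if every attempt failed.
    """
    history = list(messages)  # do not mutate the caller's list
    for attempt in range(1, max_retries + 1):
        reply = model.invoke(history).content
        answer = normalize_yes_no(reply)
        if answer is not None:
            return answer, attempt
        # Keep the bad reply in the history so the hint has context.
        history.append(("ai", reply))
        history.append(("human", "Your previous response was not valid. "
                                 "Please answer ONLY with 'Yes' or 'No'."))
    return None, max_retries


# Offline stand-ins so the sketch runs without API keys.
@dataclass
class FakeReply:
    content: str


class FlakyModel:
    """Returns scripted replies in order, like a model that needs a nudge."""

    def __init__(self, replies):
        self.replies = iter(replies)

    def invoke(self, messages):
        return FakeReply(next(self.replies))


model = FlakyModel(["The answer is: No.", "No."])
print(classify_with_retries(model, [("human", "Is Paris in Spain?")]))  # ('no', 2)
```

Returning the attempt count alongside the answer makes it easy to log how often the cheap model needs a second try, which helps you judge whether it is still the cheaper option.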

Example 4: Use an LLM to process data

This example processes a HuggingFace dataset that contains thousands of news articles from CNN. Let's use an LLM to screen for articles about space exploration missions:

from datasets import load_dataset
import random
from tqdm import tqdm
from langchain_openai import ChatOpenAI
from langchain.prompts import PromptTemplate

# Load the dataset
dataset = load_dataset("AyoubChLin/CNN_News_Articles_2011-2022", split="train")

# Sample a percentage of the data (e.g., 1%)
sample_size = int(len(dataset) * 0.01)
sampled_data = random.sample(list(dataset), sample_size)

# Use GPT-4o mini for the model
model = ChatOpenAI(model_name="gpt-4o-mini")

# Create a prompt template
prompt = PromptTemplate.from_template(
    "Does the following news article discuss space exploration missions? Answer with 'Yes' or 'No':\n\n{text}"
)

chain = prompt | model

# Process sampled data
for item in tqdm(sampled_data, desc="Processing articles", unit="article"):
    text = item["text"]
    response = chain.invoke({"text": text})

    # Extract the content from AIMessage
    response_text = response.content.strip().lower()

    if response_text == "yes":
        print("Text discusses space flight:\n", text)

That took a little over 12 minutes to check 1% of the news articles and find these, which the model flagged as discussing space exploration:

Processing articles: 24%|██▎ | 380/1610 [02:54<15:30, 1.32article/s]Text discusses space flight:

Sign up for CNN's Wonder Theory science newsletter. Explore the universe with news on fascinating discoveries, scientific advancements and more. (CNN)The early warning system to detect asteroids that pose a threat to Earth, operated by NASA and its collaborators around the world, got to flex its muscles. It successfully detected a small asteroid 6 1/2 feet (2 meters) wide just hours before it smashed into the atmosphere over the Norwegian Sea before disintegrating on Friday, March 11, according to a statement from NASA's Jet Propulsion Laboratory on Tuesday. That's too small to pose any hazard to Earth, NASA said. A still from an animation showing asteroid 2022 EB5's predicted orbit around the sun before crashing into the Earth's atmosphere on March 11.Often such tiny asteroids slip through the surveillance net, and 2022 EB5 -- as the asteroid was named -- is only the fifth of this kind to be spotted and tracked prior to impact. (Fear not, a larger asteroid would be discovered and trac...

Processing articles: 30%|███ | 490/1610 [03:44<07:55, 2.35article/s]Text discusses space flight:

Sign up for CNN's Wonder Theory science newsletter. Explore the universe with news on fascinating discoveries, scientific advancements and more. (CNN)Total lunar eclipses, a multitude of meteor showers and supermoons will light up the sky in 2022.The new year is sure to be a sky-gazer's delight with plenty of celestial events on the calendar. There is always a good chance that the International Space Station is flying overhead. And if you ever want to know what planets are visible in the morning or evening sky, check The Old Farmer's Almanac's visible planets guide.Here are the top sky events of 2022 so you can have your binoculars and telescope ready. Full moons and supermoonsRead MoreThere are 12 full moons in 2022, and two of them qualify as supermoons. This image, taken in Brazil, shows a plane passing in front of the supermoon in March 2020. Definitions of a supermoon can vary, but the term generally denotes a full moon that is brighter and closer to Earth than normal and thus app...

Processing articles: 35%|███▍ | 558/1610 [04:21<07:36, 2.31article/s]Text discusses space flight:

Story highlights Crowds hand each member of the group a red rose While secluded, the crew has few luxuries The group asks scientists to put the data it gathered to good useThe group's isolation simulates a 520-day mission to MarsSix volunteer astronauts emerged from a 'trip' to Mars on Friday, waving and grinning widely after spending 520 days in seclusion. Crowds handed each member of the group a red rose after their capsule opened at the facility in Moscow. Scientists placed the six male volunteers in isolation in 2010 to simulate a mission to Mars, part of the European Space Agency's experiment to determine challenges facing future space travelers. The six, who are between ages 27 and 38, lived in a tight space the size of six buses in a row, said Rosita Suenson, the agency's program officer for human spaceflight.During the period, the crew dressed in blue jumpsuits showered on rare occasions and survived on canned food. Messages from friends and family came with a lag based on...

Processing articles: 56%|█████▋ | 908/1610 [06:57<05:01, 2.33article/s]Text discusses space flight:

Story highlightsGene Seymour: Gene Roddenberry may have created "Star Trek," but Leonard Nimoy and character of Spock are inseparableHe says Nimoy had many other artistic endeavors, photography, directing, poetry, but he was, in the end, SpockGene Seymour is a film critic who has written about music, movies and culture for The New York Times, Newsday, Entertainment Weekly and The Washington Post. The opinions expressed in this commentary are solely those of the writer. (CNN)Everybody on the planet knows that Gene Roddenberry created Mr. Spock, the laconic, imperturbable extra-terrestrial First Officer for the Starship Enterprise. But Mr. Spock doesn't belong to Roddenberry, even though he is the grand exalted progenitor of everything that was, is, and forever will be "Star Trek."Mr. Spock belongs to Leonard Nimoy, who died Friday at age 83. And though he doesn't take Spock with him, he and Spock remain inseparable. Zachary Quinto, who plays Spock in the re-booted feature film incarnati...

Processing articles: 100%|██████████| 1610/1610 [12:31<00:00, 2.14article/s]

You can run this example with this notebook on Google Colab.
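Printing matches as they arrive is fine for a demo. If you want to review the flagged articles afterwards (some, like the Leonard Nimoy commentary above, may deserve a second look), it can help to collect them instead. Here is a minimal sketch of that variation. The screen_articles function and the fake_classify stand-in are hypothetical, and the two sample articles are made up for the demonstration.

```python
def screen_articles(articles, classify):
    """Return the articles for which `classify(text)` answers 'yes'."""
    flagged = []
    for article in articles:
        answer = classify(article["text"]).strip().lower()
        if answer == "yes":
            flagged.append(article)
    return flagged


# Offline stand-in for the LLM call; in the example above this role is
# played by chain.invoke({"text": text}).content.
def fake_classify(text: str) -> str:
    return "Yes" if "asteroid" in text.lower() else "No"


# Made-up sample data, used only to exercise the function.
sample = [
    {"text": "NASA detected a small asteroid before impact."},
    {"text": "Local election results were announced on Tuesday."},
]
flagged = screen_articles(sample, fake_classify)
print(f"{len(flagged)} of {len(sample)} articles flagged")  # 1 of 2 articles flagged
```

Passing the classifier in as a function also makes it simple to swap models, or to test the loop without spending anything on API calls.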

Example 5: Structuring data using LangChain output parsers

We've got one avocado. We have a huge collection of recipes, where each one is a raw, unstructured text string. What can we make with one avocado?

You can use LangChain output parsers to extract structured data from raw text. Here's an example for extracting the number of avocados that each recipe requires:

# Imports assumed here; the snippet relies on them but the excerpt omitted them
from typing import List
from pydantic import BaseModel, Field
from langchain_core.output_parsers import PydanticOutputParser
from langchain_core.prompts import PromptTemplate

# `model` is a chat model like the ones in the earlier examples, and
# `recipe_text` is the raw text of one recipe; both are defined elsewhere.

class RecipeInfo(BaseModel):
    title: str = Field(description="The title of the recipe")
    avocado_count: float = Field(description="The number of avocados required in the recipe")
    ingredients: List[str] = Field(description="The full list of ingredients")
    directions: List[str] = Field(description="The list of directions to prepare the recipe")

parser = PydanticOutputParser(pydantic_object=RecipeInfo)

prompt = PromptTemplate(
    template="Extract the following information from the recipe:\n{format_instructions}\n{recipe_text}\n",
    input_variables=["recipe_text"],
    partial_variables={"format_instructions": parser.get_format_instructions()},
)

chain = prompt | model | parser

recipe_info = chain.invoke({"recipe_text": recipe_text})

print(f"Recipe: {recipe_info.title}")
print(f"Avocados required: {recipe_info.avocado_count}")

When we call that chain with recipe_text as input, we'll get four things: the number of avocados as a floating-point number, the title as a string, and the ingredients and directions as two lists of strings. We can easily access them as attributes of the response, like: recipe_info.avocado_count and recipe_info.title.

The mechanics of telling the LLM how to respond with structured data and then parsing the response are complicated. If you're in the middle of trying to solve a problem then you don't want to stop and spend an hour on yak shaving to reinvent that wheel. When you use the PydanticOutputParser, LangChain knows how to add the formatting instructions (as the format_instructions variable) to your prompt to tell the model to respond in JSON format with the schema that you requested. Then, it handles parsing the output. You could implement that stuff yourself but you have bigger things you need to do.
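To give a feel for what is being handled on your behalf, here is a deliberately simplified, standard-library-only sketch of the two jobs: describing the wanted JSON in the prompt, and pulling JSON back out of the reply. This is not LangChain's implementation. The SCHEMA table, both helper functions, and the sample reply are hypothetical, and unlike PydanticOutputParser this sketch only checks that the keys are present, not that the values have the right types.

```python
import json

# Hypothetical, hand-written schema description standing in for what an
# output parser derives from the RecipeInfo model.
SCHEMA = {
    "title": "string: the title of the recipe",
    "avocado_count": "number: the number of avocados required",
}


def format_instructions(schema: dict) -> str:
    """Build prompt text asking for a JSON object with the given keys."""
    lines = [f'- "{key}" ({desc})' for key, desc in schema.items()]
    return "Respond with a single JSON object with these keys:\n" + "\n".join(lines)


def parse_reply(reply: str, schema: dict) -> dict:
    """Parse the JSON spanning the first '{' to the last '}' and check its keys."""
    start, end = reply.find("{"), reply.rfind("}")
    if start == -1 or end < start:
        raise ValueError("No JSON object found in the model reply")
    data = json.loads(reply[start:end + 1])
    missing = set(schema) - set(data)
    if missing:
        raise ValueError(f"Missing keys: {sorted(missing)}")
    return data


# A made-up model reply, used only to exercise the parser.
reply = 'Here you go: {"title": "Avocado Toast", "avocado_count": 0.5}'
info = parse_reply(reply, SCHEMA)
print(info["title"], info["avocado_count"])  # Avocado Toast 0.5
```

Even this toy version has to deal with chatty replies and missing fields. Add type validation, nested structures, and lists, and it becomes clear why it is worth letting a maintained library own this part.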

Note all the JSON schema stuff it added to the prompt here:

request from application to Claude 3.5 Sonnet

response from Claude 3.5 Sonnet to the application

See the "avocado_count": 0.5? That's the important part here. The model understands to convert "1/2 ripe avocado" into a 0.5 value for that item, because LangChain generated clear formatting instructions for the model to tell it what we want. Please remember that this only works with larger, more-sophisticated models because formatting instructions consume some of the model's attention and lower its performance on the larger task. Larger models, like the ones from OpenAI that have been fine-tuned for producing valid JSON, can handle it. But, if you want the best-possible accuracy then you should avoid formatting instructions in your critical prompts. Use formatting instructions in follow-up questions instead, after the delicate decisions have already been made.

LangChain provides a variety of options for output parsers, but the PydanticOutputParser is one of the most powerful because it supports types for the items it extracts, like the floating-point type for the number of avocados in this example.

The code for this example does a keyword scan for the word "avocado" first and then inspects the recipes it finds, looking for the ones that require one or fewer avocados:

Processing 270 recipes out of 27070 avocado recipes found.

Recipe: Halibut Veracruz

Avocados required: 2.0

Skipping this recipe. It requires too many avocados (2.0).

--------------------------------------------------

Recipe: Blt Spinach Wrap Recipe

Avocados required: 2.0

Skipping this recipe. It requires too many avocados (2.0).

--------------------------------------------------

Recipe: Chicken And Avocado Salad With Lime And Cilantro

Avocados required: 2.0

Skipping this recipe. It requires too many avocados (2.0).

--------------------------------------------------

Recipe: Crunchy Quesadilla Stack

Avocados required: 1.0

Great! We can make this recipe with our one avocado.

Title:

Crunchy Quesadilla Stack

Ingredients:

- 1 -2 cup shredded cooked chicken (amt to your liking)

- 1 (10 ounce) can Rotel diced tomatoes

- 1 cup shredded cheddar cheese

- 1 small avocado, chopped

- 14 cup sliced green onion (about 2 onions)

- 1 teaspoon garlic salt

- 10 flour tortillas

- 1 (16 ounce) can refried beans

- 5 corn tostadas, fried

- cooking spray

- olive

- jalapeno pepper

- green chili

- bell pepper

Directions:

- Combine chicken, tomatoes, cheese, avacado, green onions, and garlic salt.

- Mix well.

- Spread about 1/3 cup of beans to edge of tortillas.

- Top each with tostada shell.

- Spoon chicken mixture over shell; top with remaining flour tortillas.

- Grill both sides over medium heat in skillet coated with cooking spray until golden brown.

- Cut into wedges.

- Serve with sour cream, salsa, etc.

--------------------------------------------------

Recipe: Southwest Chicken Chopped Salad

Avocados required: 2.0

Skipping this recipe. It requires too many avocados (2.0).

--------------------------------------------------

...

You can run this example with this notebook on Google Colab.
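The scan-then-inspect flow described above can be sketched in a few lines. This is an illustration under stated assumptions rather than the notebook's code: recipes_within_budget and fake_extract are hypothetical names, the recipes are short made-up strings, and the extraction step is a stand-in for the chain from the earlier snippet.

```python
def recipes_within_budget(recipes, extract_avocado_count, max_avocados=1.0):
    """Yield (recipe, count) for avocado recipes needing at most max_avocados."""
    for recipe in recipes:
        # Cheap keyword scan first, so the LLM only sees likely candidates.
        if "avocado" not in recipe.lower():
            continue
        count = extract_avocado_count(recipe)
        if count <= max_avocados:
            yield recipe, count


# Offline stand-in for the LLM extraction step (the chain in the example).
def fake_extract(recipe_text: str) -> float:
    return 2.0 if "2 avocados" in recipe_text else 1.0


# Made-up sample data, used only to exercise the function.
recipes = [
    "Halibut Veracruz: 2 avocados, halibut, tomatoes",
    "Crunchy Quesadilla Stack: 1 small avocado, tortillas, beans",
    "Plain Rice: rice, water",
]
matches = list(recipes_within_budget(recipes, fake_extract))
for recipe, count in matches:
    print(f"{count}: {recipe}")
```

Doing the keyword scan before any model call keeps costs down, because recipes that never mention avocados are skipped without an LLM request.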

Focus on your own project

These examples aim to demonstrate that LangChain can enable you to do big things without a lot of code. It can handle details that you don't want to think about when you're solving real problems under pressure. It's reliable and actively supported, and it doesn't require you to change how you do things. We use it a lot, and we think it could help you, too.