Maximize Profit, Not Intelligence

Picture this: you're at the helm of a startup, brimming with ideas, ready to leverage the marvels of AI, particularly the genius of GPT 4. You set out looking for opportunities in text classifiers: the kind of thing you might use to moderate posts on social media, route customers in a phone maze, direct customer emails to support agents, rate transcripts of call center recordings, etc. Sounds straightforward, but it's tricky. Language is complex.

You went out, worked your business development magic, and landed a deal! The venture will be profitable if you can build an automation that classifies sentences as being about Spanish immigration law or not, with at least 95% accuracy, at a cost of less than $200 per million classifications.

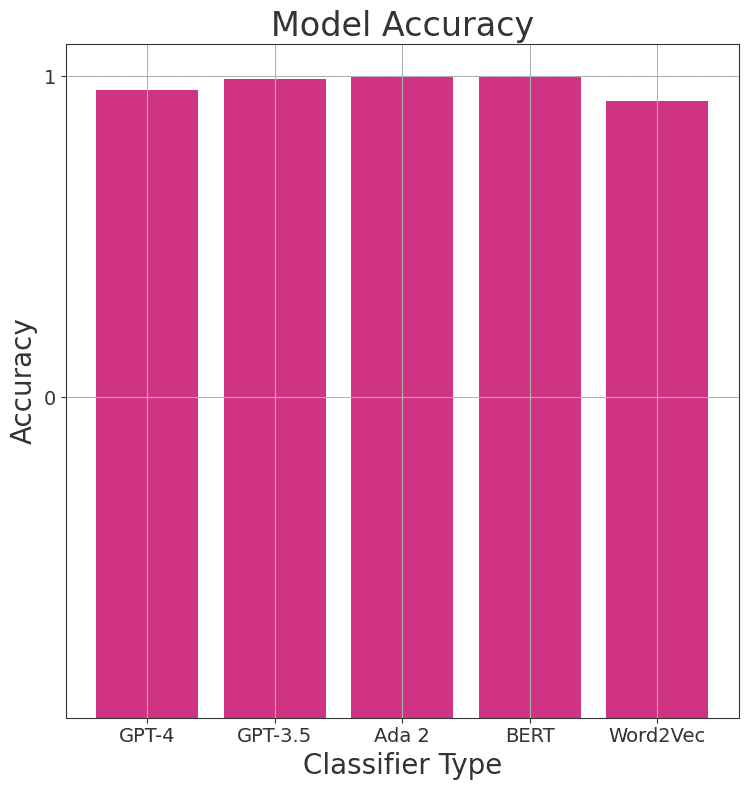

No problem! GPT 4's function-calling feature can generate structured responses! You jump into VS Code and use GPT 4 in GitHub Copilot to craft a text classifier based on GPT 4, and it's 97% accurate when you evaluate it. AWESOME, it works!
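For readers who want to see what a function-calling classifier looks like, here is a minimal sketch of the request shape used by the OpenAI Python client (openai>=1.0, Chat Completions with tools). The tool name classify_sentence, the field is_spanish_immigration_law, and the prompt are hypothetical, not taken from the original code. The sketch only builds the request and parses the reply, so it runs without an API key.

```python
import json

# Hypothetical tool name; the original classifier's schema is not shown in the article.
TOOL_NAME = "classify_sentence"


def build_request(sentence: str, model: str = "gpt-4") -> dict:
    """Build keyword arguments for a Chat Completions call that forces the
    model to answer through a single function ("tool") call."""
    tool = {
        "type": "function",
        "function": {
            "name": TOOL_NAME,
            "description": "Record whether a sentence is about Spanish immigration law.",
            "parameters": {
                "type": "object",
                "properties": {
                    "is_spanish_immigration_law": {
                        "type": "boolean",
                        "description": "True if the sentence is about Spanish immigration law.",
                    }
                },
                "required": ["is_spanish_immigration_law"],
            },
        },
    }
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a text classifier."},
            {"role": "user", "content": sentence},
        ],
        "tools": [tool],
        # Force a call to our tool so the reply is structured, never free text.
        "tool_choice": {"type": "function", "function": {"name": TOOL_NAME}},
    }


def parse_arguments(arguments_json: str) -> bool:
    """Turn the tool call's JSON arguments string into a yes/no label."""
    return bool(json.loads(arguments_json)["is_spanish_immigration_law"])
```

To classify a sentence for real, you would pass client.chat.completions.create(**build_request(sentence)) and feed response.choices[0].message.tool_calls[0].function.arguments to parse_arguments.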

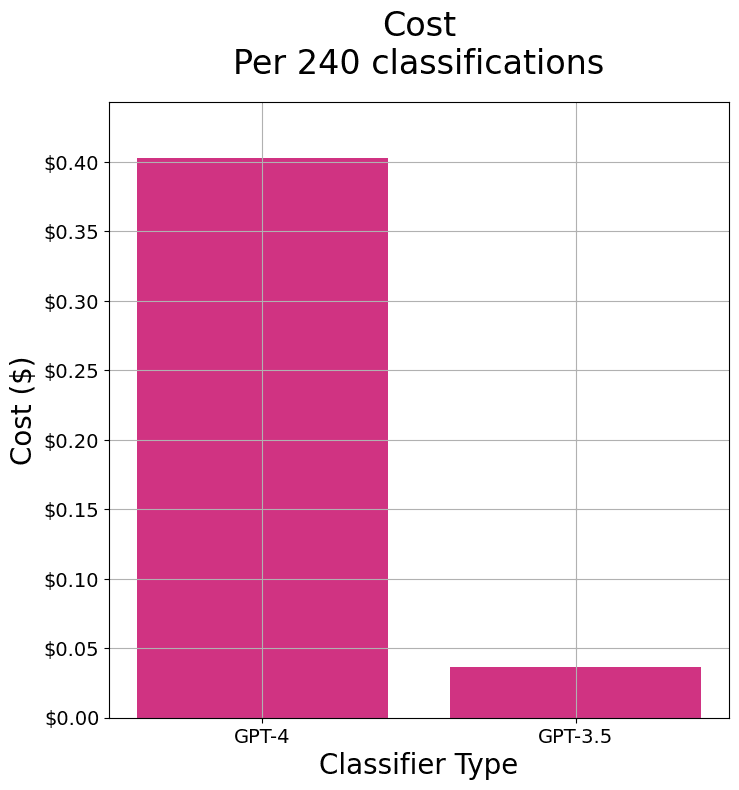

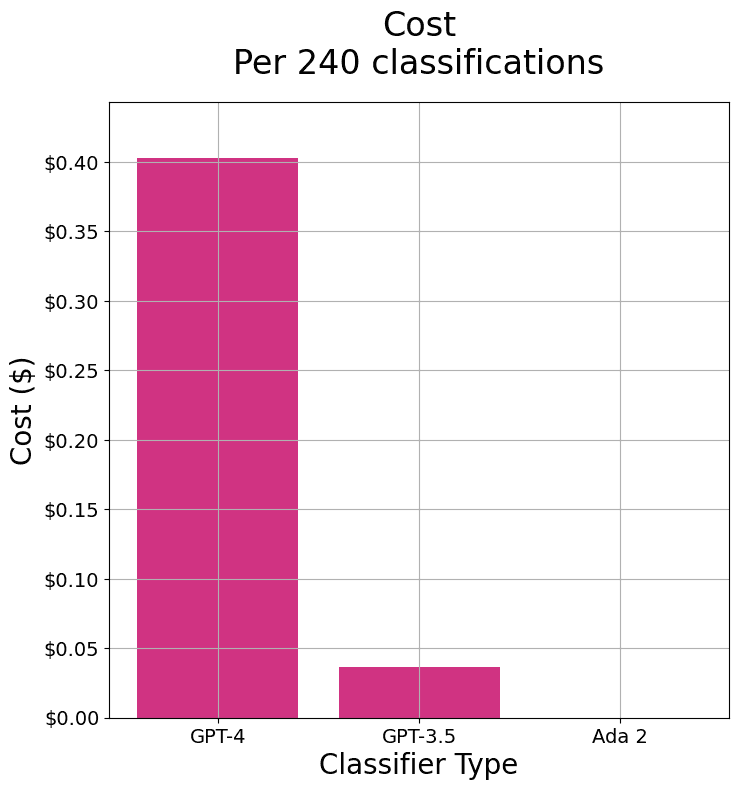

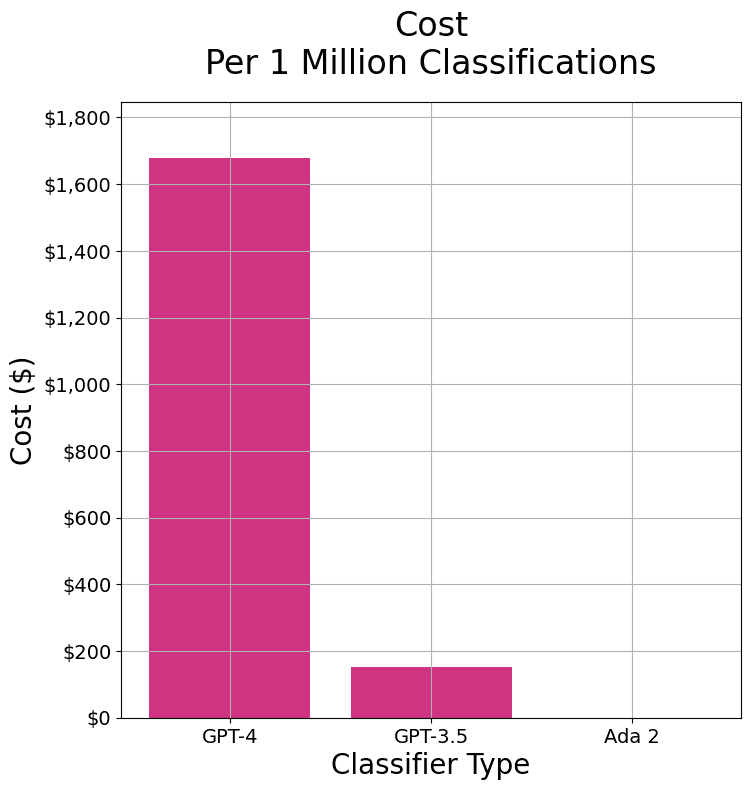

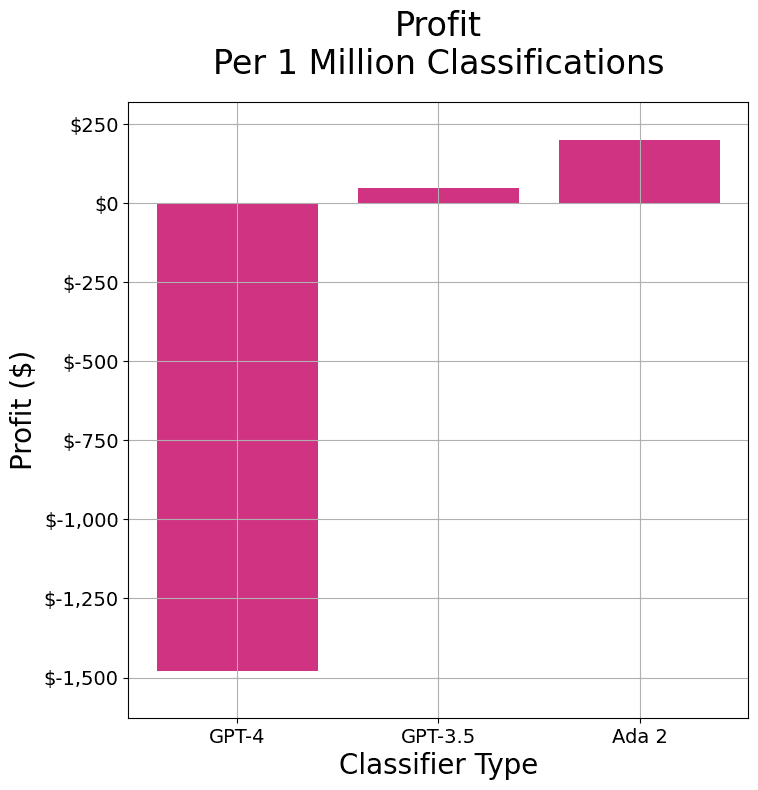

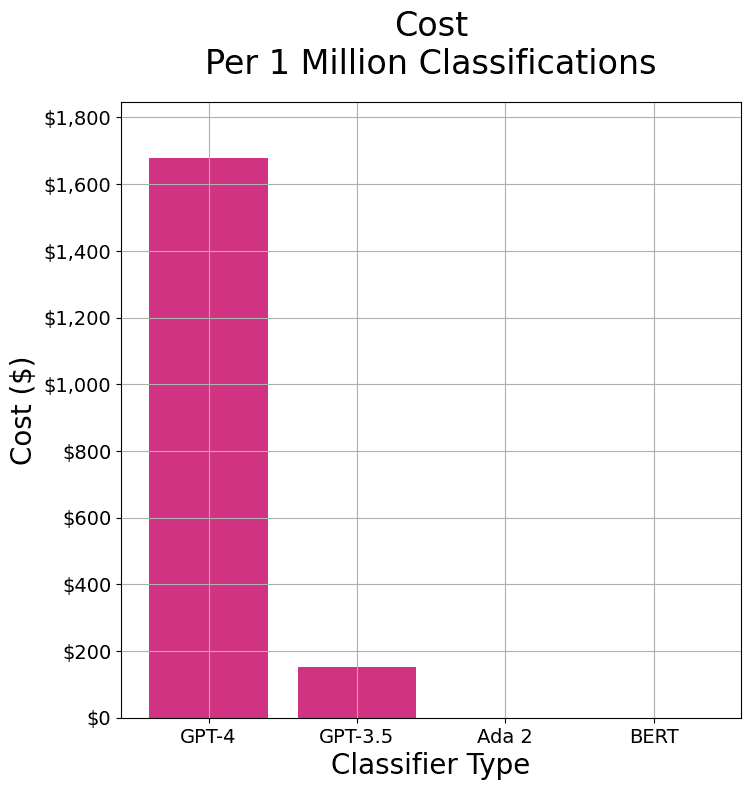

But there's a problem: You check the costs on your evaluation run, and wow, GPT 4 tokens are expensive! It costs just over $0.40 to do 240 classifications, which means that it would cost... almost $1,700 per million classifications. The venture would not be profitable.
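The scaling above is simple proportion: divide the cost of the evaluation run by the number of classifications, then multiply by a million. A quick sketch using the numbers from the paragraph:

```python
def cost_per_million(total_cost_usd: float, n_classifications: int) -> float:
    """Scale the cost of an evaluation run up to one million classifications."""
    return total_cost_usd / n_classifications * 1_000_000


gpt4 = cost_per_million(0.40, 240)
print(f"GPT 4: ${gpt4:,.0f} per million")  # prints: GPT 4: $1,667 per million
print(f"Break-even multiple: {gpt4 / 200:.1f}x the $200 ceiling")
```

Since the run cost "just over" $0.40, the true figure is slightly above $1,667, which is where "almost $1,700" comes from.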

This is the story of 2023: We have amazing new tools, but there's a difference between a cool proof-of-concept demo and a viable business venture. Now, as 2024 unfolds, the narrative shifts from mere intelligence to profitability. Once you find a use case with business value and prove the concept, you can maximize profit by reducing your costs: find the least sophisticated model that suits your task.

It's kind of like how you set the optimal price: It's the maximum price that the market will bear. In AI applications, the path to profitability is to use the dumbest model that the problem will bear.

Make it cheaper

One easy way to make a GPT 4 solution cheaper is to use GPT 3.5 instead. It's a really good model. And it's a great value compared to GPT 4, which is amazing but expensive.

You make a simple tweak to your code to swap the models and you do your evaluation run again to check the cost. It's much better! GPT 3.5 is an order of magnitude cheaper:

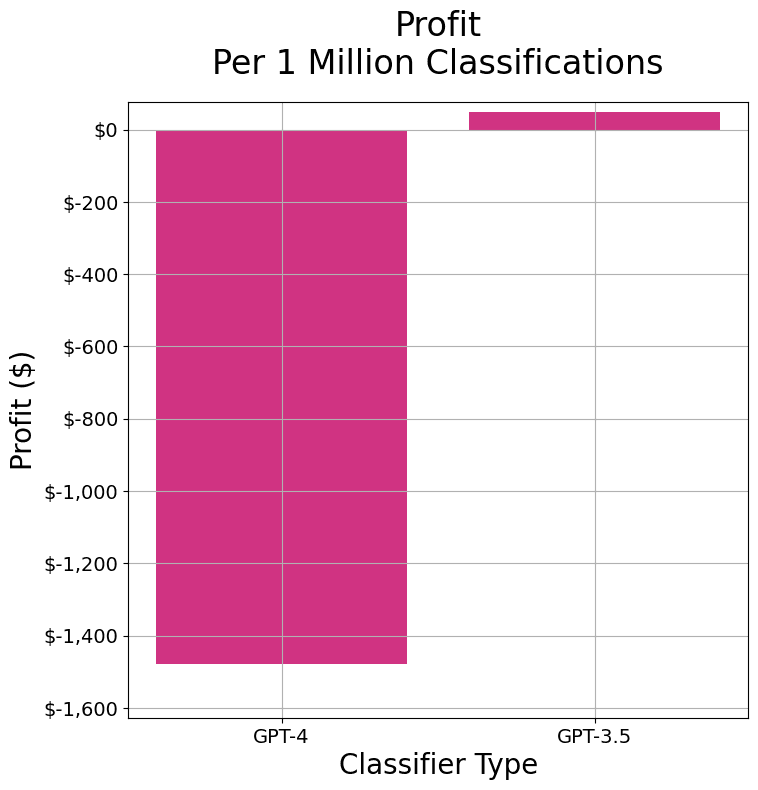

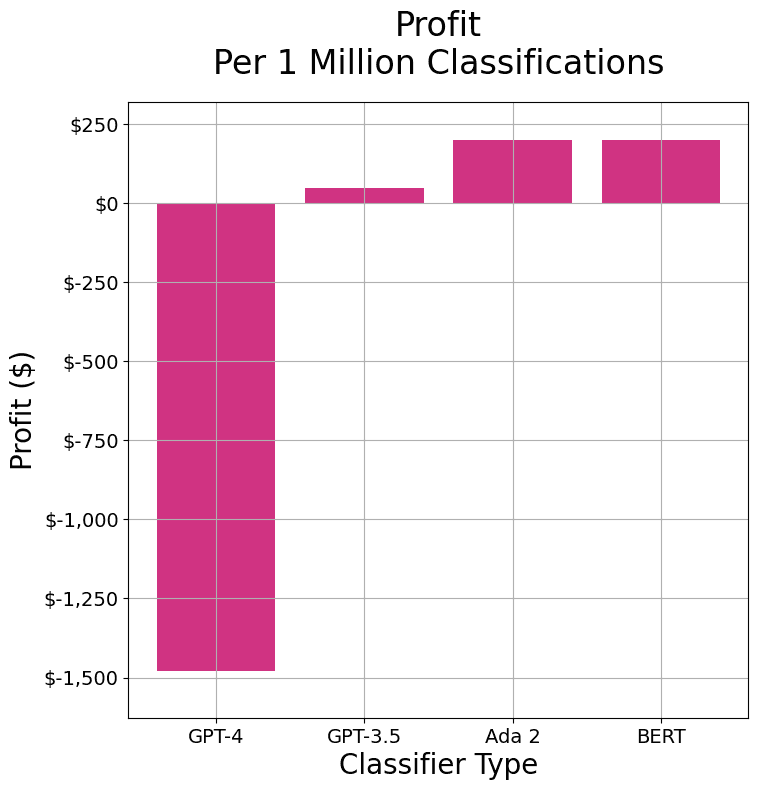

The best part here is that the cost per million will be below your break-even point of $200 per million. You're profitable! Yay!

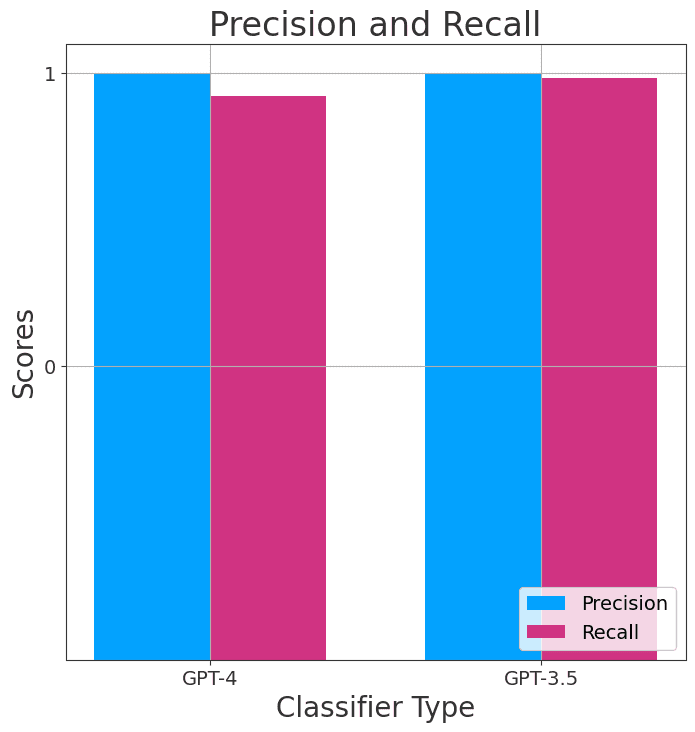

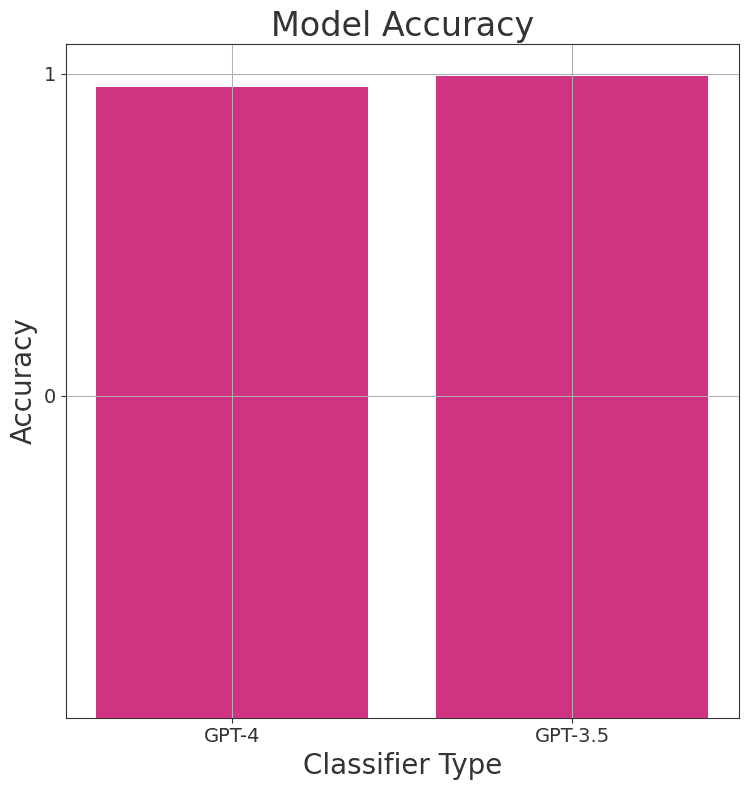

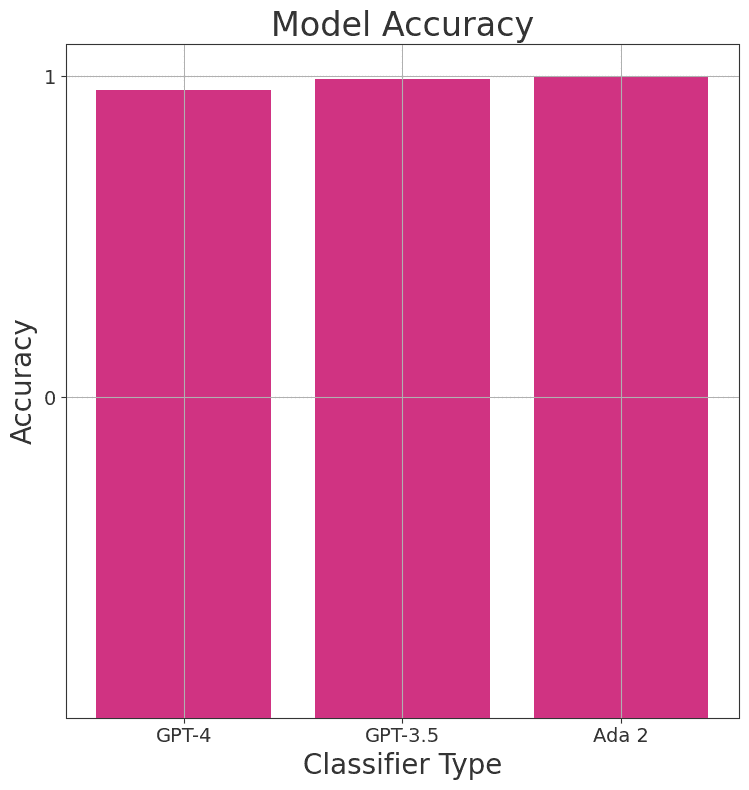

But is it as accurate? Yes, it's about the same accuracy. It's enough. In the evaluation phase you're measuring things like precision and recall and accuracy, and you get about the same results.

The accuracy score is actually a little higher for GPT 3.5, but that might be because the labeled sentences were themselves generated with GPT 3.5.

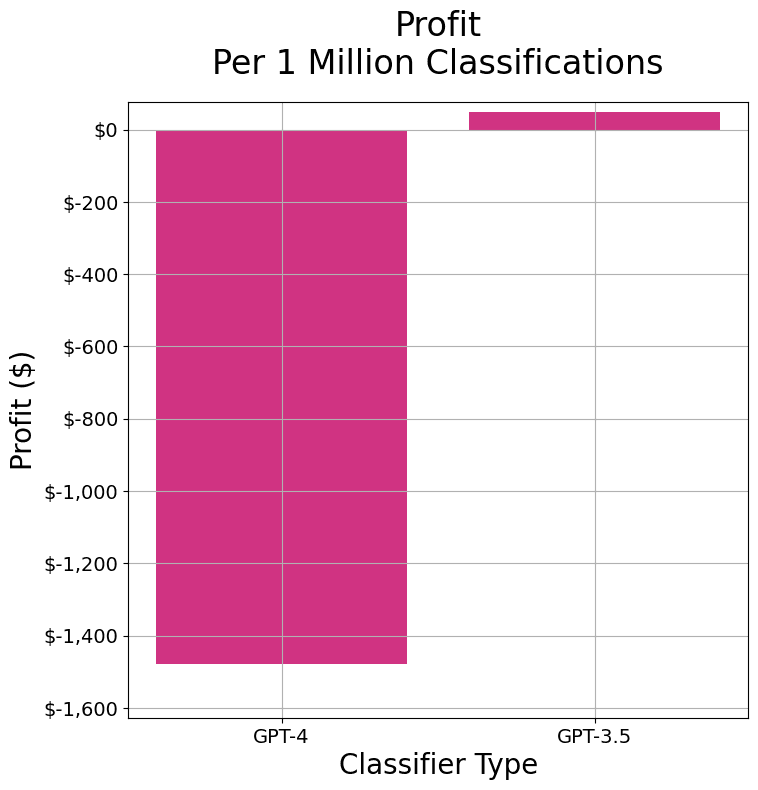

Now you have a profitable venture. But your margins are razor thin:

You want to optimize for profit, right? And in software we optimize through iteration. Let's try to cut costs.

Machine-learning text classifiers

This kind of algorithm that you're selling at a profit is called a "classifier", and there are a lot of ways to make them, including ways that don't use large language models. Let's look into making a classifier with machine learning.

One way to do it is to use a different model from OpenAI that isn't a generative text model. It's an embeddings model, for turning text into strings of numbers. There are similar embeddings models from other companies, like AWS.

You can use those numbers to classify the text in any way that you want if you have a lot of examples that you can use for finding patterns in the data. Here's how:

Step One: Crafting Numerical Fingerprints

Computers don't understand words, only numbers. To process words with computers we have to convert them to numbers. You could just assign every word a number, "cat" is 1, "dog" is 2, etc. But there are more useful ways.

You can take a sentence and transform it into a numerical pattern, an 'embedding'. Imagine these embeddings as secret codes that capture the essence of each sentence numerically. We have tools that measure how 'similar' these embeddings are, assigning a number to represent the degree of resemblance between any two sentences. So, the distance between "cat" and "dog" might be smaller than the distance between "cat" and "suspension bridge".
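One common similarity measure for embeddings is cosine similarity: the cosine of the angle between two vectors, near 1 for vectors pointing the same way and lower for unrelated ones. Here is a minimal sketch; the three-dimensional "embeddings" are toy values invented for illustration (real models emit far more dimensions; Ada 2 emits 1,536).

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy vectors, not output from any real embeddings model.
cat = [0.9, 0.8, 0.1]
dog = [0.85, 0.75, 0.2]
bridge = [0.1, 0.2, 0.95]

print(cosine_similarity(cat, dog))     # close to 1: similar meaning
print(cosine_similarity(cat, bridge))  # much lower: unrelated
```

A "distance" in the sense used above is often taken as 1 minus this similarity, so similar sentences end up close together.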

While embeddings offer rich, condensed representations of text, they don't inherently categorize sentences. How can we build a classifier out of this?

Step Two: The Learning Machine

Enter the machine-learning model. Its job is to learn from examples: to recognize which numerical patterns correspond to our topic of interest. By examining known questions about Spanish immigration law alongside unrelated sentences, the model learns where to draw the line between the two. It's akin to teaching someone to recognize a specific tune among various songs.

For that, we used logistic regression, a statistical method that's like a skilled goldsmith, discerning which nuggets of text match our sought-after criteria. It operates on a simple yet powerful principle: calculating the likelihood that a new sentence belongs to one of two categories, a binary decision of 'yes' or 'no'.

Picture logistic regression as a finely tuned scale. It weighs each number in a sentence's numerical fingerprint (our carefully crafted vector embedding) and decides how 'heavy' it is in terms of relevance to our categories. By adjusting this scale during training, logistic regression finds the precise balance point, the exact threshold, that tips the scale towards a 'yes' or a 'no'. This isn't just a guessing game; it's a calculated decision based on the distinct patterns and relationships our embeddings reveal.
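Written as math, the "scale" is a weighted sum of the embedding's components passed through the logistic (sigmoid) function. This is the standard textbook form of logistic regression; the 0.5 threshold is the usual default and an assumption here, since the article doesn't say which threshold the notebook uses.

```latex
% x: a sentence embedding with d components; w: one learned weight per component; b: a learned bias
p(\text{yes} \mid \mathbf{x}) = \sigma\left(\mathbf{w}^\top \mathbf{x} + b\right)
  = \frac{1}{1 + e^{-(\mathbf{w}^\top \mathbf{x} + b)}}

% The balance point: p >= 0.5 exactly when w^T x + b >= 0
\hat{y} = \begin{cases} 1 & \text{if } \mathbf{w}^\top \mathbf{x} + b \ge 0 \\ 0 & \text{otherwise} \end{cases}

% Training chooses w and b to minimize the average log loss over N labeled sentences
\mathcal{L}(\mathbf{w}, b) = -\frac{1}{N} \sum_{i=1}^{N} \left[ y_i \log p_i + (1 - y_i) \log (1 - p_i) \right]
```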

Logistic regression is a simple yet effective tool in our arsenal, designed to take an array of "features" as inputs and produce a binary yes/no classification. That's exactly what we need: a model that takes the string of numbers from a vector embedding and predicts whether that embedding represents a sentence about Spanish immigration law.
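In practice you would reach for a library implementation such as scikit-learn's LogisticRegression (the article doesn't say which one the notebook uses). To show what happens underneath, here is a from-scratch sketch using only the standard library: full-batch gradient descent on log loss. The learning rate, epoch count, and toy data are arbitrary illustration choices.

```python
import math


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def train_logistic_regression(X, y, lr=0.5, epochs=500):
    """Fit weights w and bias b by gradient descent on log loss.
    X: list of feature vectors (e.g. embeddings); y: list of 0/1 labels."""
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * d, 0.0
        for xi, yi in zip(X, y):
            # Predicted probability minus true label.
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(d):
                grad_w[j] += err * xi[j]
            grad_b += err
        w = [wj - lr * gj / n for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


def predict(w, b, x, threshold=0.5) -> bool:
    """True means "yes, this is about Spanish immigration law"."""
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= threshold
```

Note that predict is one dot product plus a sigmoid. That is why a trained classifier like this answers almost instantly, while all the real work happens once, in training.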

Method

We generated a list of about 1,200 fake sentences using GPT 3.5, where each is labeled yes or no: Does the sentence represent a question about Spanish immigration law? We asked for sentences in English, Spanish and Spanglish. You can see those in the data/labeled.csv file in the GitHub repository for the experiment.
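Loading a file like this and embedding every sentence might look roughly like the sketch below. The column names sentence and label, and the "yes"/"no" label values, are assumptions: check the real header of data/labeled.csv. Embedding is done in batches through a pluggable function; make_openai_embedder shows one way to call Ada 2 (model "text-embedding-ada-002") with the openai>=1.0 client, imported lazily so the rest runs without the package.

```python
import csv
from typing import Callable, Iterable, List, Sequence, Tuple

# Hypothetical column names; the real data/labeled.csv may differ.
TEXT_COLUMN = "sentence"
LABEL_COLUMN = "label"

EmbedBatch = Callable[[List[str]], List[List[float]]]


def load_labeled(lines: Iterable[str]) -> Tuple[List[str], List[int]]:
    """Read sentences and labels from CSV lines; "yes" becomes 1, anything else 0."""
    texts, labels = [], []
    for row in csv.DictReader(lines):
        texts.append(row[TEXT_COLUMN])
        labels.append(1 if row[LABEL_COLUMN].strip().lower() == "yes" else 0)
    return texts, labels


def embed_all(texts: Sequence[str], embed_batch: EmbedBatch, batch_size: int = 100) -> List[List[float]]:
    """Embed texts in batches so no single request gets too large."""
    vectors: List[List[float]] = []
    for start in range(0, len(texts), batch_size):
        vectors.extend(embed_batch(list(texts[start:start + batch_size])))
    return vectors


def make_openai_embedder(api_key: str) -> EmbedBatch:
    """Return an embed_batch function backed by OpenAI's Ada 2 embeddings model."""
    from openai import OpenAI  # lazy import: only needed if you actually call OpenAI

    client = OpenAI(api_key=api_key)

    def embed_batch(batch: List[str]) -> List[List[float]]:
        response = client.embeddings.create(model="text-embedding-ada-002", input=batch)
        return [item.embedding for item in response.data]

    return embed_batch
```

In Colab, the API key could come from the secrets panel via google.colab.userdata.get("openai"), then embed_all(texts, make_openai_embedder(key)).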

We used the Python notebook in the repository to load that data, generate an embedding for each sentence in the file using the OpenAI Ada 2 embeddings model, and train a logistic regression classifier on those embeddings. How did it perform?

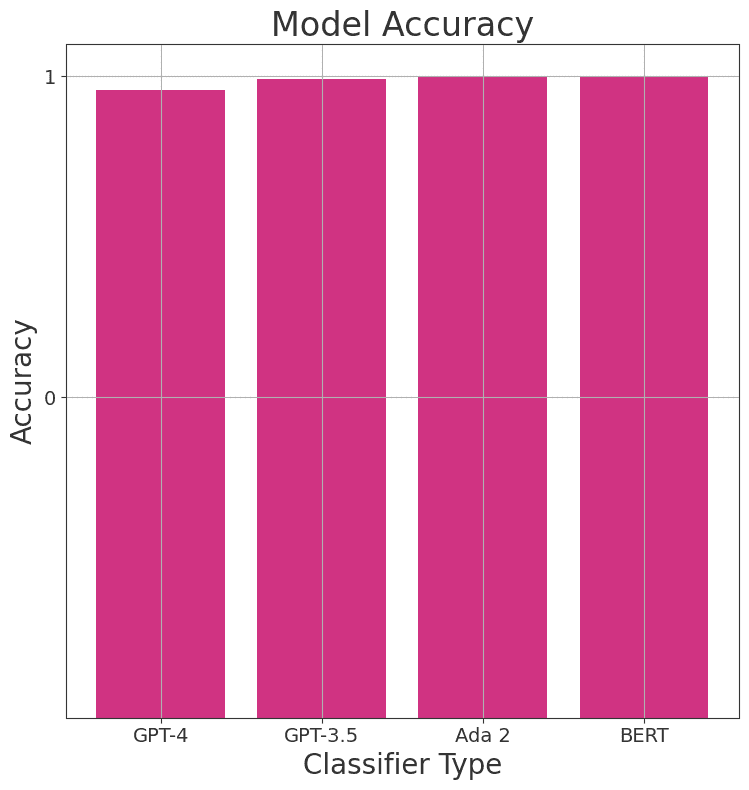

The accuracy improved! Amazing!

Cost reduction

Even more amazing is the order-of-magnitude cost reduction. The Ada 2 model only costs $0.0001 / 1K tokens for input and none for output, compared with GPT 3.5 which costs $0.0010 / 1K tokens for input and $0.0020 / 1K tokens for output. The cost has dropped so low that you can't even see it on the visualization next to the cost of GPT 4.
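Per-token pricing turns into a per-million figure with a bit of arithmetic. The sketch below uses the prices quoted above; the token counts in the example calls are hypothetical. A GPT 3.5 function-calling request also carries a prompt and a tool schema, so it sends more input tokens than the bare sentence an embeddings call needs.

```python
# Prices in USD per 1K tokens, as quoted in the paragraph above.
PRICES = {
    "gpt-3.5": {"input": 0.0010, "output": 0.0020},
    "ada-2": {"input": 0.0001, "output": 0.0},  # embeddings have no output tokens
}


def token_cost_per_million(model: str, input_tokens: int, output_tokens: int = 0) -> float:
    """Cost of one million classifications given average tokens per classification."""
    p = PRICES[model]
    per_call = input_tokens / 1000 * p["input"] + output_tokens / 1000 * p["output"]
    return per_call * 1_000_000


# Hypothetical averages: 150 input + 20 output tokens for GPT 3.5,
# 20 input tokens (just the sentence) for Ada 2.
print(token_cost_per_million("gpt-3.5", 150, 20))
print(token_cost_per_million("ada-2", 20))
```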

Wow! Let's scale that to a million classifications:

And we know why that's important. An order-of-magnitude increase in profit:

Speed improvement

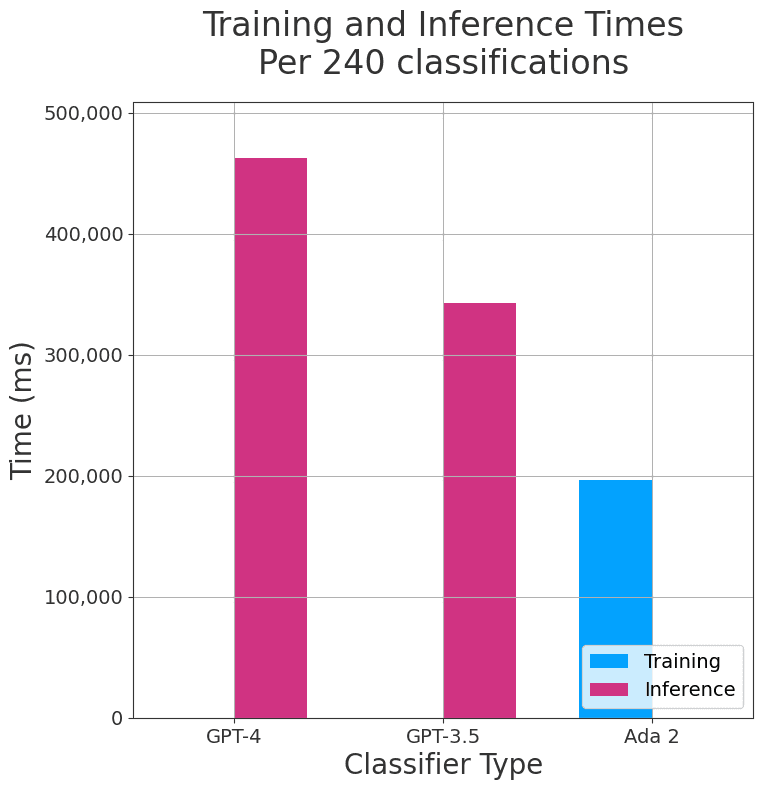

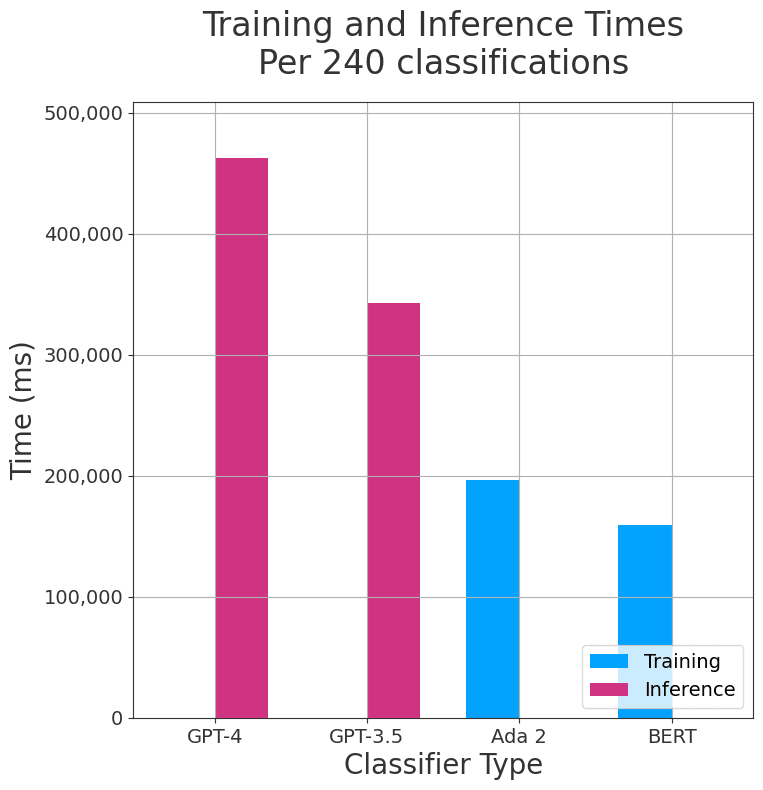

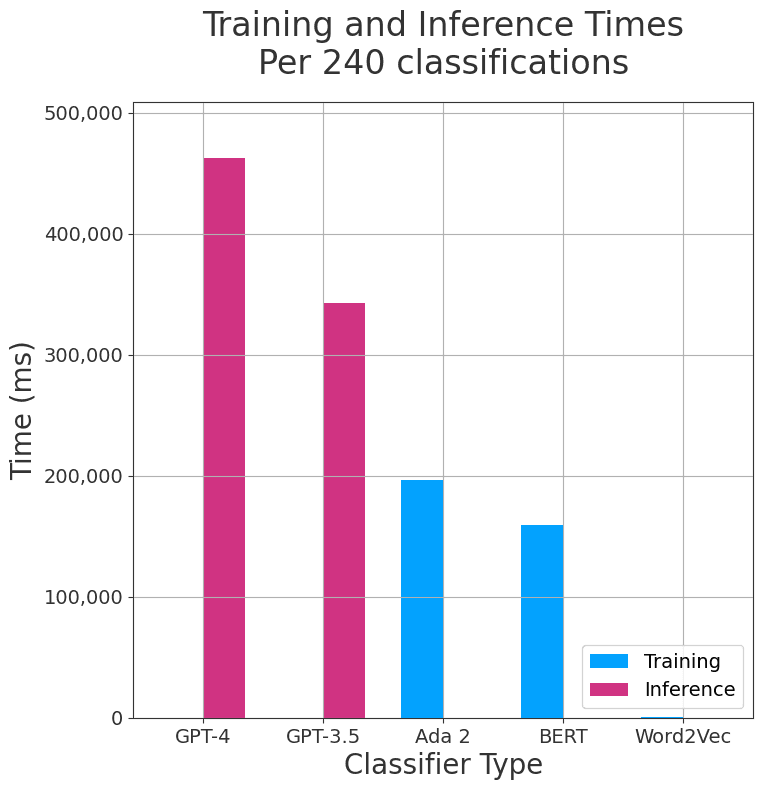

Another incredible thing is how fast machine-learning classifiers are compared with LLMs, which generate responses one token at a time. A machine-learning classifier shifts the time required to a one-time training step, and it's virtually instant at inference time. GPT 3.5 and GPT 4 don't require any training since they're "pre-trained", but when the job at hand is text classification, they're super slow compared with a machine-learning classifier.

More!

Can we make it even cheaper? Can we eliminate the OpenAI bill entirely?

Yes, we can. OpenAI doesn't have a monopoly on vector embeddings. There are lots of ways to do it. We could use AWS, but currently the pricing of the Titan Embeddings model is the same as the price of the OpenAI Ada 2 model, so that won't help us with costs.

But there are algorithms we can run ourselves. One of them is BERT.

BERT stands for Bidirectional Encoder Representations from Transformers. It revolutionized the understanding of context in language models by reading the text in both directions and building a deep context understanding. BERT processes words in relation to all the other words in a sentence, rather than one-by-one in order. It's a common embeddings model, so let's try it.
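BERT outputs one vector per token, so getting a single sentence embedding needs a pooling step. The article doesn't say which checkpoint or pooling the notebook used; the sketch below assumes the bert-base-uncased checkpoint from Hugging Face transformers and mean pooling (averaging the real tokens' vectors while ignoring padding). The pooling logic is shown twice: in plain Python so it's easy to follow, and with PyTorch tensors inside bert_embed.

```python
from typing import List, Sequence


def mean_pool(token_vectors: Sequence[Sequence[float]], attention_mask: Sequence[int]) -> List[float]:
    """Average the vectors of real tokens (mask == 1), skipping padding tokens."""
    dim = len(token_vectors[0])
    total = [0.0] * dim
    count = 0
    for vec, keep in zip(token_vectors, attention_mask):
        if keep:
            count += 1
            for j in range(dim):
                total[j] += vec[j]
    if count == 0:
        raise ValueError("attention mask selects no tokens")
    return [t / count for t in total]


def bert_embed(texts: List[str], model_name: str = "bert-base-uncased") -> List[List[float]]:
    """Mean-pooled sentence embeddings from a BERT checkpoint (assumed setup)."""
    import torch
    from transformers import AutoModel, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModel.from_pretrained(model_name)
    model.eval()
    batch = tokenizer(texts, padding=True, truncation=True, return_tensors="pt")
    with torch.no_grad():
        hidden = model(**batch).last_hidden_state  # shape: (batch, tokens, hidden)
    mask = batch["attention_mask"].unsqueeze(-1).float()  # (batch, tokens, 1)
    pooled = (hidden * mask).sum(dim=1) / mask.sum(dim=1)  # same math as mean_pool
    return pooled.tolist()
```

Another common choice is to take the vector of BERT's first ([CLS]) token instead of averaging; which works better for a given task is an empirical question.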

Accuracy

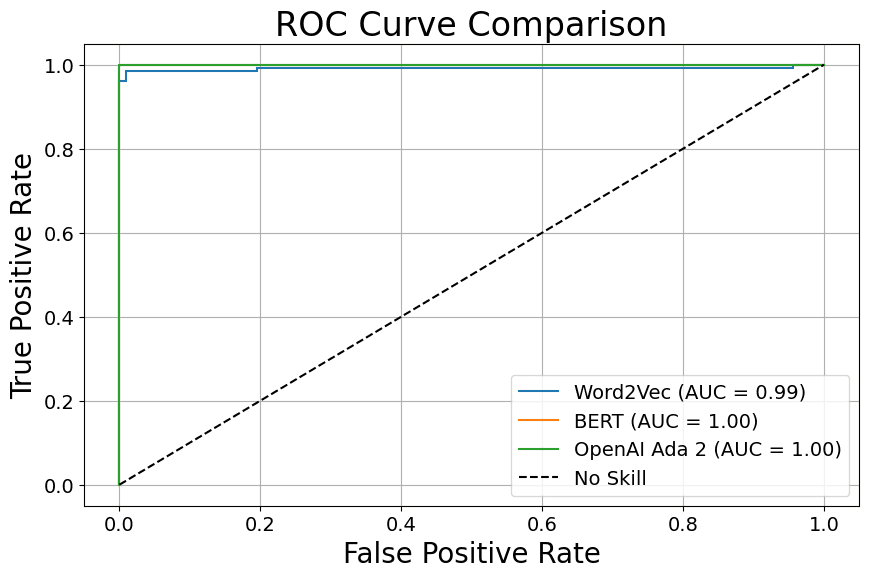

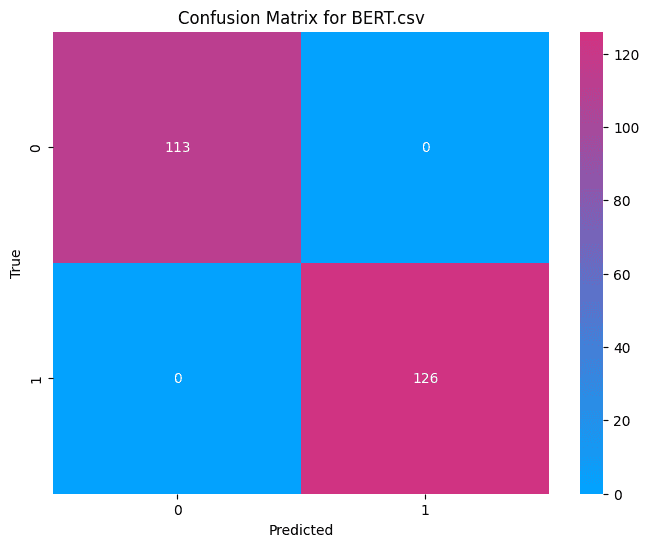

BERT does just as well as Ada 2 at this task, despite being free. Good to know!

Speed

Computing the embeddings using BERT took about the same amount of time as outsourcing the job to OpenAI; in fact, it took a little less.

And, like the model based on OpenAI embeddings, it's virtually instant to compute classifications with it. All the time is in a one-time training step.

Cost

This classifier completely eliminates the OpenAI bill, which was already so small with Ada 2 that you can't even see it on this visualization next to the cost of GPT 4 and GPT 3.5. Now the cost is zero.

Profit

And that increases our profit! Our key performance indicator!

More!

We can't really improve the price of "free".

And we can't improve on a perfect accuracy score.

What about training time? Sure, okay, let's try Word2Vec, since it's simpler than BERT. Maybe it will be faster.

Word2Vec is a group of models that produce word embeddings by capturing the context of a word in a document. It leverages neural networks and operates on the principle that words appearing in similar contexts have similar meanings.
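Because Word2Vec produces one vector per word, a sentence embedding has to be built from the word vectors. A simple and common approach, assumed here since the article doesn't specify, is to average them. The sketch works with any mapping from word to vector; a trained gensim model's model.wv supports the same "in" and [] lookups. Averaging also throws away word order, which BERT keeps.

```python
import re
from typing import Dict, List, Optional, Sequence


def tokenize(text: str) -> List[str]:
    """Lowercase and split on word characters (handles accented Spanish letters)."""
    return re.findall(r"\w+", text.lower())


def sentence_vector(tokens: Sequence[str], word_vectors: Dict[str, Sequence[float]]) -> Optional[List[float]]:
    """Average the vectors of in-vocabulary words; None if no word is known."""
    known = [word_vectors[t] for t in tokens if t in word_vectors]
    if not known:
        return None
    dim = len(known[0])
    return [sum(v[j] for v in known) / len(known) for j in range(dim)]


# Toy two-dimensional vectors, invented for illustration.
toy_vectors = {"visa": [1.0, 0.0], "para": [0.0, 1.0]}
print(sentence_vector(tokenize("¿Visa para España?"), toy_vectors))
```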

Is it faster? Yes! It's so much faster at computing embeddings that you can't even see the blip on this visualization, next to the times that the other models require. There's a tiny bar there on the bottom right if you squint.

Does it work, though?

Oops. No, it's significantly less accurate, at 97%. That's still accurate enough for our requirements, but the drop in accuracy doesn't seem worth it just to save a few seconds in a one-time training step. It won't significantly boost our profit, and that's what matters.

Okay, let's stop while we're ahead and go with BERT. You can't know until you try it. Now we know.

Our goal is to use the dumbest model that the problem will bear. To find that point, we have to go all the way to the point where we find a model that's just not good enough. We have found that point. It's just like finding the market price for something: You increase the price until the market shows you that the price is too high, and then you lower it to the point where you get sales. We can do the same thing with AI solutions to find the best value.
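The selection rule can be written down as a tiny function: keep the candidates that clear the accuracy bar and stay under the cost ceiling, then take the cheapest, breaking cost ties by accuracy (as with two free options like BERT and Word2Vec). This is one reading of the rule, sketched under that assumption; the candidate names and numbers in the usage lines are hypothetical.

```python
from typing import List, NamedTuple, Optional


class Candidate(NamedTuple):
    name: str
    accuracy: float          # measured on your evaluation set
    cost_per_million: float  # USD per million classifications


def pick_model(candidates: List[Candidate], min_accuracy: float, max_cost: float) -> Optional[Candidate]:
    """Cheapest candidate that meets the requirements; among equally cheap
    candidates, prefer the more accurate one. None if nothing qualifies."""
    viable = [c for c in candidates
              if c.accuracy >= min_accuracy and c.cost_per_million < max_cost]
    if not viable:
        return None
    return min(viable, key=lambda c: (c.cost_per_million, -c.accuracy))


# Hypothetical candidates for illustration only.
options = [
    Candidate("model_a", 0.97, 1700.0),
    Candidate("model_b", 0.97, 150.0),
    Candidate("model_c", 0.99, 0.0),
    Candidate("model_d", 0.96, 0.0),
]
print(pick_model(options, min_accuracy=0.95, max_cost=200.0))
```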

Try it yourself

Don't take our word for it: you can try it for yourself for free. It's amazing that you can train and run the classifiers from a free Google Colab notebook. To use it, just go there and set up two things:

- Use the "Download raw file" feature in GitHub to get the data file, then upload it to the Colab environment as labeled.csv.
- Set a secret called openai and set its value to your OpenAI API key. The notebook uses it to call the Ada 2 API.

You can also clone the GitHub repository if you want to run it in SageMaker, on your own machine, or wherever. It should run just about anywhere, with no special GPU power required.

You can also see an example of a text classifier built using function calling here in this Colab notebook or on GitHub.

Evaluation metrics

We want to see how well the three different embeddings techniques work for our classifier. We used these metrics:

Accuracy: This is the simplest measure, telling us the proportion of total predictions our model got right. It's a quick way to gauge overall effectiveness.

Precision and Recall: These metrics offer a more nuanced view. Precision tells us what fraction of the sentences our model flagged as questions about Spanish immigration law actually were. Recall, on the other hand, tells us what fraction of the actual Spanish immigration law questions our model successfully identified.

ROC-AUC Score: The ROC curve plots the true positive rate against the false positive rate as the classification threshold varies, which helps us understand the trade-off between the two. The AUC (Area Under the Curve) quantifies the model's ability to distinguish between the classes: a higher AUC means better discrimination.
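A handy way to compute ROC-AUC without drawing the curve: it equals the probability that a randomly chosen positive example gets a higher score than a randomly chosen negative one, with ties counting as half. A minimal sketch of that pairwise definition (scikit-learn's roc_auc_score computes the same quantity, more efficiently):

```python
from typing import Sequence


def roc_auc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Pairwise ROC-AUC: fraction of (positive, negative) pairs ranked correctly."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # a tie counts as half a correct ranking
    return wins / (len(pos) * len(neg))


# Scores would be the classifier's predicted probabilities of "yes".
print(roc_auc([1, 1, 0, 0], [0.9, 0.2, 0.8, 0.1]))
```

The pairwise loop is O(positives × negatives), which is fine for a dataset of roughly a thousand sentences.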

Confusion Matrices: These are tables that lay out the successes and failures of our predictions in detail. They show four types of outcomes: true positives (correctly identified legal questions), false positives (non-legal questions wrongly identified as legal), true negatives (correctly identified non-legal questions), and false negatives (legal questions missed by the model). This matrix helps us pinpoint areas where our model might be overconfident or too cautious, guiding us in fine-tuning its performance.
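The four confusion-matrix counts are all you need to derive accuracy, precision, and recall. A minimal sketch, where 1 means "about Spanish immigration law"; returning 0.0 when a denominator is zero is a convention chosen here (scikit-learn does the same by default, with a warning).

```python
from typing import Dict, Sequence


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, int]:
    """Count true/false positives and negatives."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for t, p in zip(y_true, y_pred):
        if t and p:
            counts["tp"] += 1
        elif p:  # predicted yes, actually no
            counts["fp"] += 1
        elif not t:  # predicted no, actually no
            counts["tn"] += 1
        else:  # predicted no, actually yes
            counts["fn"] += 1
    return counts


def summarize(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, and recall derived from the confusion counts."""
    c = confusion_counts(y_true, y_pred)
    total = sum(c.values())
    predicted_yes = c["tp"] + c["fp"]
    actual_yes = c["tp"] + c["fn"]
    return {
        "accuracy": (c["tp"] + c["tn"]) / total,
        "precision": c["tp"] / predicted_yes if predicted_yes else 0.0,
        "recall": c["tp"] / actual_yes if actual_yes else 0.0,
    }


print(summarize([1, 1, 1, 0, 0, 0, 0, 0], [1, 1, 0, 1, 0, 0, 0, 0]))
```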

Maximize profit, not intelligence

You can do amazing things with OpenAI models, but you don't always need them. BERT is a widely available, open algorithm that's so cheap and easy to run that you can compute 1,500 sentence embeddings from a free Google Colab notebook in a minute or two. And it performs just as well as the OpenAI embeddings that you have to pay for. Word2Vec embeddings, which are even cheaper to compute because the process is less computationally intense, also perform really well: not good enough for this example, but good enough for lots of things.

As we analyze these results, a broader business lesson emerges. In the AI arena, more powerful doesn't always mean more valuable. Just like our experiment, where BERT held its ground against mightier models, businesses need to gauge the cost-effectiveness of AI solutions. With just a couple of iterations we turned a non-viable proof-of-concept into a viable business and then improved the profit by orders of magnitude. The profit is the thing that matters, and that's what our iterations need to focus on.