TextGrad

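In the paper TextGrad: Automatic “Differentiation” via Text, researchers from Stanford introduce a framework for automatic “differentiation” via text: instead of numeric gradients, natural-language feedback from an LLM is passed backward through an LLM-based system to improve its components.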

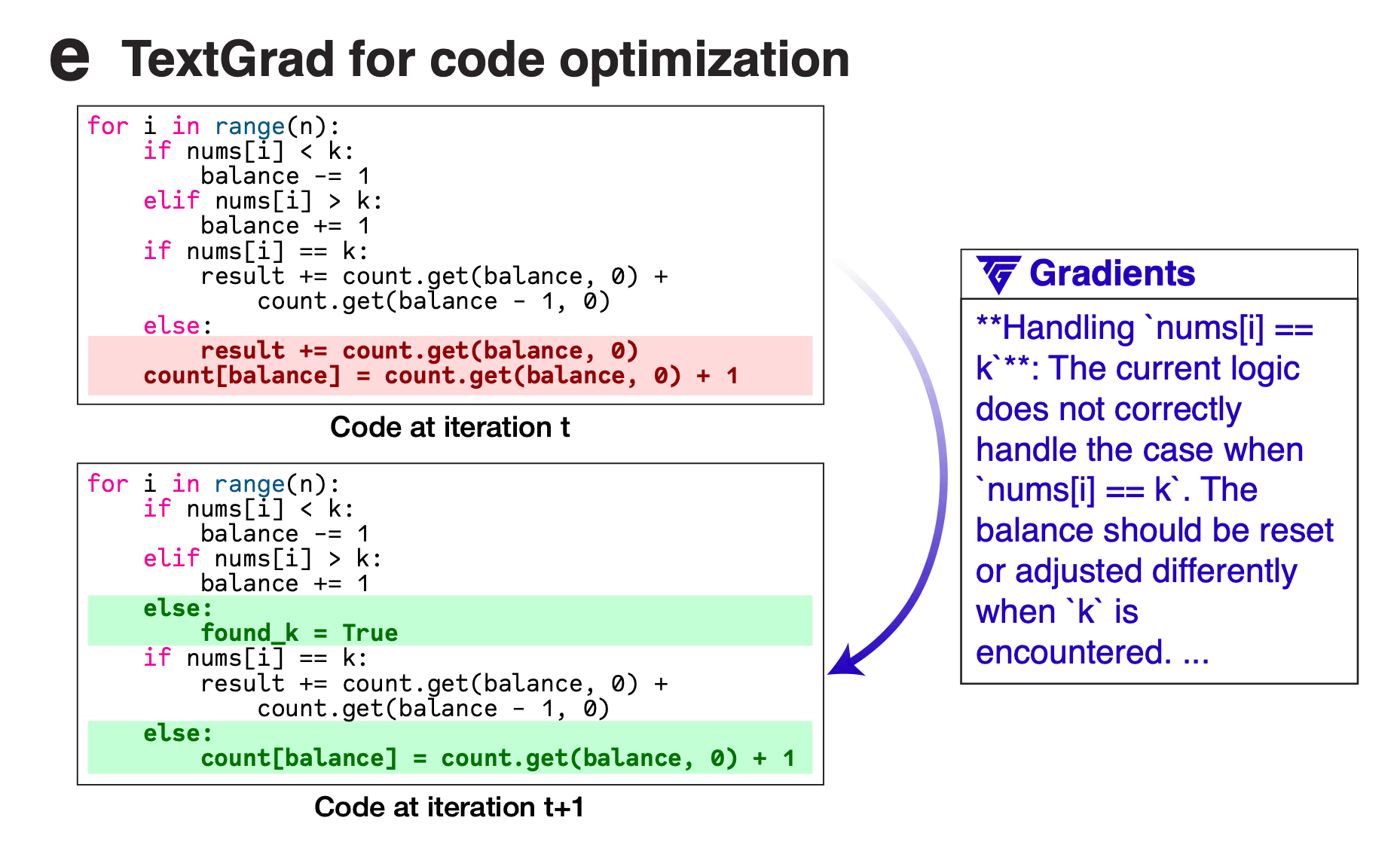

This overall idea is the key to automating prompt engineering. To automate it, you need a way to test your prompt, figure out what's wrong with the result, and then turn that diagnosis into an edit to the prompt. TextGrad is about the diagnosis step: figuring out what's wrong.
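The test, diagnose, edit loop described above can be sketched in a few lines of Python. This is only an illustration, not TextGrad's API: `optimize_prompt`, `toy_run`, `toy_critique`, and `toy_edit` are hypothetical names, and the toy functions are simple stand-ins for what would be real LLM calls.

```python
def optimize_prompt(prompt, run, critique, edit, max_steps=3):
    """Test the prompt, ask what is wrong, and apply the critique as an edit."""
    history = [prompt]
    for _ in range(max_steps):
        output = run(prompt)                 # test the current prompt
        feedback = critique(prompt, output)  # figure out what's wrong
        if not feedback:                     # empty feedback: nothing left to fix
            break
        prompt = edit(prompt, feedback)      # turn the feedback into an edit
        history.append(prompt)
    return prompt, history


# Toy stand-ins (assumptions for illustration; a real system would call an LLM here).
def toy_run(prompt):
    return "42" if "digits only" in prompt else "The answer is 42."


def toy_critique(prompt, output):
    return "" if output.isdigit() else "Output should contain digits only."


def toy_edit(prompt, feedback):
    return prompt + " Answer with digits only."


final_prompt, history = optimize_prompt(
    "What is 6 * 7?", toy_run, toy_critique, toy_edit
)
print(final_prompt)
```

With these toy functions the loop stops after one edit, because the second run produces output that the critique accepts.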

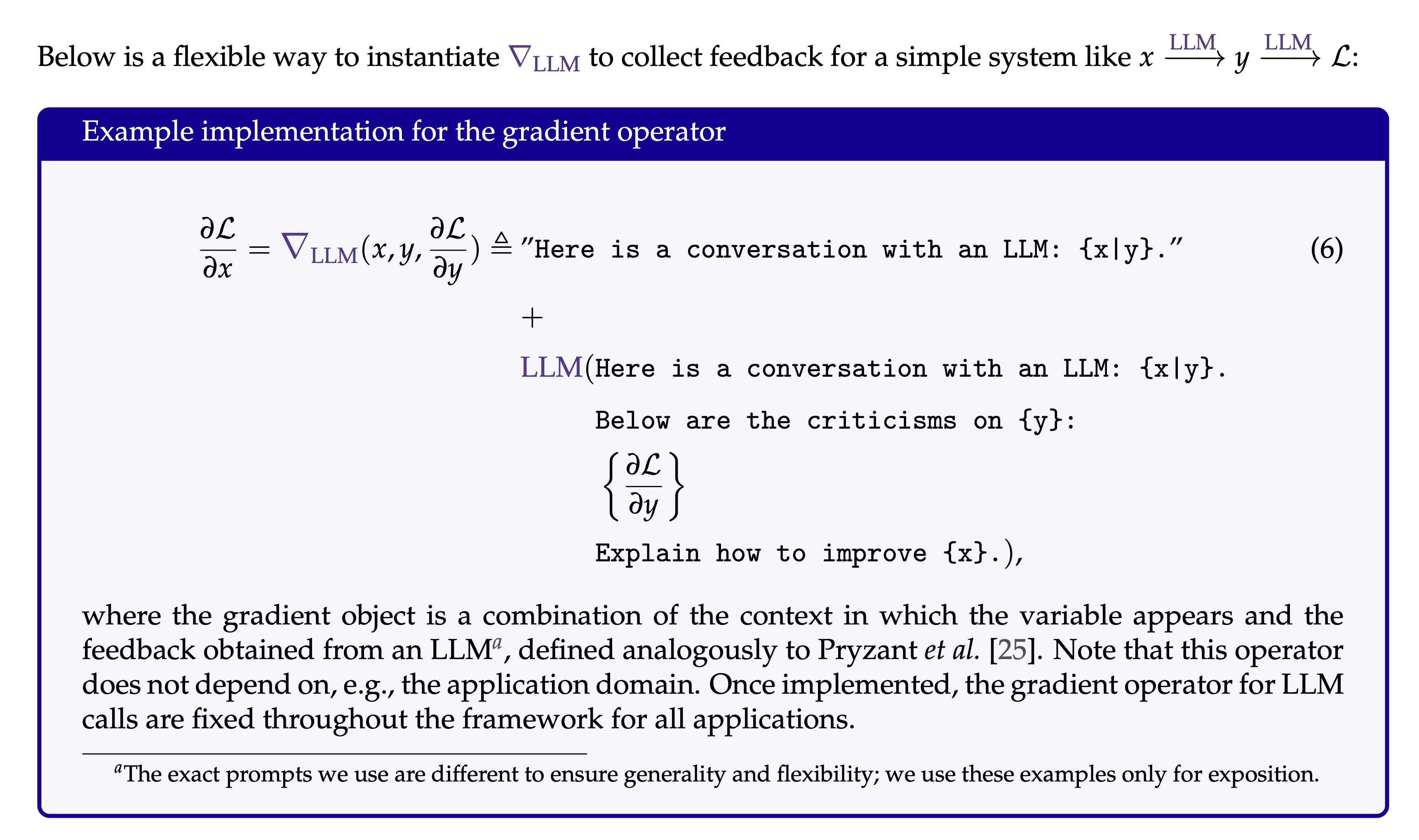

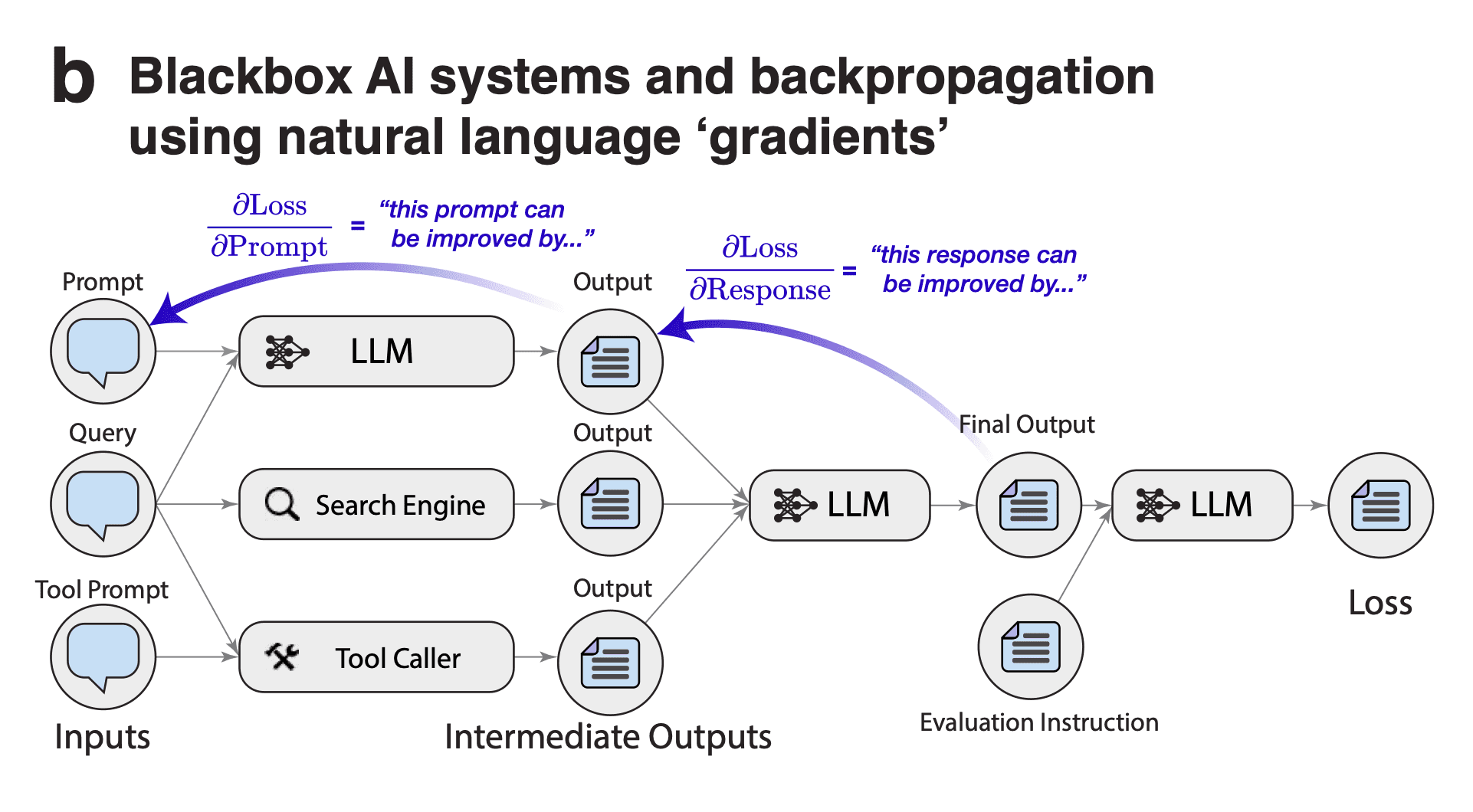

It operates in an agentic paradigm, where each node in an execution graph can be an LLM request or some other operation. For the LLM requests, it establishes a rough equivalent of a 'loss function' in machine learning: a way to judge how close the output at that stage was to the ideal completion.

Then it propagates that feedback backwards through the agentic graph to the beginning, giving the entire agentic system feedback on how to improve its prompts.
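To make the graph picture concrete, here is a minimal sketch of a forward pass, a text-based 'loss', and a backward pass that hands feedback from each node to the nodes feeding it. It is not the TextGrad library's implementation: `Node`, `forward`, `backward`, and the `toy_*` functions are hypothetical names, and the toy functions replace what would be LLM calls in a real system.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    name: str
    prompt: str
    inputs: List["Node"] = field(default_factory=list)  # upstream nodes
    output: str = ""
    feedback: List[str] = field(default_factory=list)   # textual "gradients"


def forward(nodes, llm):
    # `nodes` must be in topological order (every input before its consumer).
    for node in nodes:
        context = " ".join(parent.output for parent in node.inputs)
        node.output = llm(node.prompt, context)


def backward(nodes, loss_feedback, critic):
    # Seed the last node with the loss feedback, then walk the graph in reverse,
    # translating each node's feedback into feedback for its inputs.
    nodes[-1].feedback.append(loss_feedback)
    for node in reversed(nodes):
        downstream = " ".join(node.feedback)
        for parent in node.inputs:
            parent.feedback.append(critic(parent, node, downstream))


# Toy stand-ins (assumptions for illustration; real versions would call an LLM).
def toy_llm(prompt, context):
    return f"{prompt}({context})"


def toy_loss(output, ideal):
    return "" if ideal in output else f"Output should contain '{ideal}'."


def toy_critic(parent, child, downstream):
    return f"{parent.name} -> {child.name}: {downstream}"


draft = Node("draft", "Draft an answer")
review = Node("review", "Review the draft", inputs=[draft])
final = Node("final", "Write the final answer", inputs=[review])
graph = [draft, review, final]

forward(graph, toy_llm)
loss_feedback = toy_loss(final.output, ideal="42")
backward(graph, loss_feedback, toy_critic)

for node in graph:
    print(node.name, node.feedback)
```

After `backward`, every node holds feedback traced back from the final output, which a separate editing step could then use to rewrite that node's prompt.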